As part of my series on designing a market data feed handler system, this is the best use case I found on the internet.

A High-Frequency Trading (HFT) firm needs to implement a robust market data feed handler system to support its trading and risk management operations. The system must handle real-time data ingestion, processing, and distribution to various components within the firm to make split-second trading decisions and manage risk effectively.

Components Involved:

- Market Data Ingestion Service: Captures real-time data from multiple exchanges and data providers.

- Order Management System (OMS): Executes trades based on the processed data.

- Risk Management System: Monitors and manages risk exposure in real-time.

- Database: Stores historical data for analysis and back-testing.

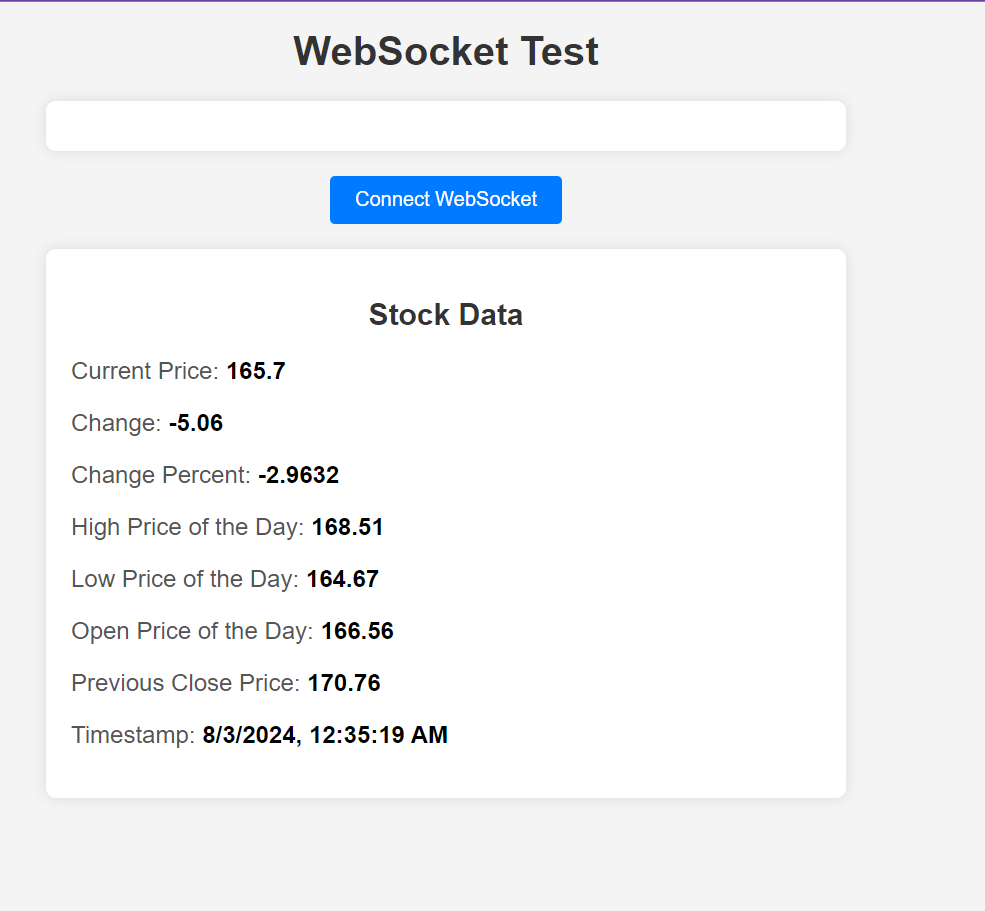

- Dashboard: Visualizes real-time data and system status for traders and risk managers.

Of the entire scenario, I have implemented only the first part so far: data ingestion.

Basically, I had to identify the key data points that the trading algorithms need to make decisions, and capture them while fetching the data.

Here is the list of data points I added to the Kafka message structure that carries the fetched data:

- c: Current price

- h: High price of the day

- l: Low price of the day

- o: Open price of the day

- pc: Previous close price

- t: Timestamp of the last update

Why each data point matters:

- c (Current Price): Crucial for real-time trading decisions and current portfolio valuation.

- h (High Price of the Day): Useful for identifying resistance levels and setting sell targets.

- l (Low Price of the Day): Useful for identifying support levels and setting buy targets.

- o (Open Price of the Day): Provides context for intraday trading strategies.

- pc (Previous Close Price): Useful for calculating daily price changes and volatility.

- t (Timestamp): Ensures data timeliness and can be used for logging and auditing purposes.
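To make these fields concrete, here is a minimal Python sketch of how a consumer might turn one message into a typed object. It assumes the message value is JSON using the short keys above, that the symbol travels separately (for example as the Kafka message key), and that `t` is a Unix timestamp in seconds; the `Quote` class and its method names are hypothetical. The `daily_change_pct` helper illustrates the "daily price change" use of `pc`.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Quote:
    """One quote update; each field maps to one of the short keys c, h, l, o, pc, t."""
    symbol: str
    current_price: float         # c
    high_price: float            # h
    low_price: float             # l
    open_price: float            # o
    previous_close_price: float  # pc
    timestamp: int               # t (assumed: Unix epoch seconds)

    @classmethod
    def from_message(cls, symbol: str, raw: bytes) -> "Quote":
        """Parse a JSON message value (e.g. a Kafka record value) into a Quote."""
        d = json.loads(raw)
        return cls(
            symbol=symbol,
            current_price=float(d["c"]),
            high_price=float(d["h"]),
            low_price=float(d["l"]),
            open_price=float(d["o"]),
            previous_close_price=float(d["pc"]),
            timestamp=int(d["t"]),
        )

    def daily_change_pct(self) -> float:
        """Percent change of the current price versus the previous close."""
        return (self.current_price - self.previous_close_price) * 100 / self.previous_close_price


q = Quote.from_message("AAPL", b'{"c": 102.0, "h": 103.5, "l": 99.0, "o": 100.0, "pc": 100.0, "t": 1700000000}')
```

Here `q.daily_change_pct()` is 2.0, since the price is up 2 from a previous close of 100.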

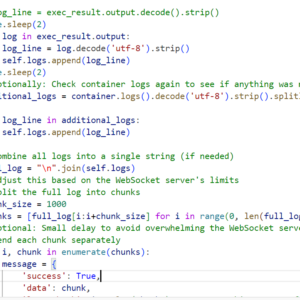

As mentioned in my previous post, I have one Kafka service that captures the data and another that broadcasts it to a WebSocket, which the UI can connect to in order to show real-time data as it is fetched.

Here is a snippet of it:

Above is how the real-time data is captured from Finnhub into this application using Kafka pub-sub.
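Since the snippet itself is not reproduced here, the following is a rough, self-contained sketch of the same flow, not the actual service code. To keep it runnable without a broker, Kafka is replaced by a hypothetical in-memory `InMemoryTopic` and each WebSocket client is modeled as a plain callback; `ingest` and `broadcast` are illustrative names only. Unlike Kafka, which retains records and tracks consumer offsets, this stand-in simply drains its queue on each poll. In the real services, `publish` and `poll` would map to a Kafka client's producer and consumer calls.

```python
import json
from collections import deque
from typing import Any, Callable, Deque, Dict, List, Tuple

# A record is (key, value), mirroring Kafka's key/value message model.
Record = Tuple[bytes, bytes]


class InMemoryTopic:
    """Hypothetical stand-in for a Kafka topic: an ordered queue of records."""

    def __init__(self) -> None:
        self._records: Deque[Record] = deque()

    def publish(self, key: bytes, value: bytes) -> None:
        self._records.append((key, value))

    def poll(self) -> List[Record]:
        """Return every record published since the last poll, in order, and drain the queue."""
        batch = list(self._records)
        self._records.clear()
        return batch


def ingest(topic: InMemoryTopic, symbol: str, quote: Dict[str, Any]) -> None:
    """Ingestion side: serialize a fetched quote as JSON and publish it keyed by symbol."""
    topic.publish(symbol.encode("utf-8"), json.dumps(quote).encode("utf-8"))


def broadcast(topic: InMemoryTopic, clients: List[Callable[[str], None]]) -> int:
    """Broadcast side: forward each new record to every connected client; return sends made."""
    sent = 0
    for key, value in topic.poll():
        message = json.dumps({"symbol": key.decode("utf-8"), **json.loads(value)})
        for send in clients:
            send(message)  # in the real service this would be a WebSocket send
            sent += 1
    return sent
```

For example, `ingest(topic, "AAPL", {"c": 102.0, "t": 1700000000})` followed by `broadcast(topic, [received.append])` delivers one JSON message containing the symbol and the quote fields to the `received` list.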

That's what I have done so far.

Next, I plan to integrate this captured data with the Trading and Risk Management Systems, which can be done by implementing trading algorithms.

Here is my plan for doing that.

Integrating with Trading and Risk Management Systems

- Trading Algorithms:
  - Will use current_price (c) to make buy/sell decisions.
  - Will use high_price (h) and low_price (l) to set stop-loss and take-profit levels.
  - Will use open_price (o) and previous_close_price (pc) to analyze price movements and trends.

- Risk Management:
  - Will monitor current_price to assess real-time exposure.
  - Will compare high_price, low_price, and current_price to historical data to evaluate market volatility.
  - Will use timestamp (t) to ensure the data's freshness and accuracy for risk calculations.

- Data Storage and Analysis:
  - Will store the captured data points in a time-series database for historical analysis and back-testing.
  - Will regularly update the database with new data to maintain an accurate record of price movements.
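A few of the risk-management checks in this plan are small enough to sketch directly. The Python helpers below are illustrative assumptions, not a standard risk model: `is_fresh` rejects quotes whose `t` is older than a chosen threshold, `intraday_range_pct` uses (h - l) relative to c as a crude volatility proxy, `volatility_ratio` compares that range against historical ranges, and `position_exposure` marks a position to the current price.

```python
import time
from typing import Optional, Sequence


def is_fresh(timestamp: float, max_age_seconds: float, now: Optional[float] = None) -> bool:
    """True if the quote's timestamp t is at most max_age_seconds old."""
    current_time = time.time() if now is None else now
    return current_time - timestamp <= max_age_seconds


def intraday_range_pct(high: float, low: float, current: float) -> float:
    """(h - l) as a percentage of c: a crude proxy for today's volatility."""
    return (high - low) * 100 / current


def volatility_ratio(today_range_pct: float, historical_range_pcts: Sequence[float]) -> float:
    """Today's range divided by the average historical range; above 1 means a wider-than-usual day."""
    if not historical_range_pcts:
        raise ValueError("need at least one historical range")
    return today_range_pct / (sum(historical_range_pcts) / len(historical_range_pcts))


def position_exposure(quantity: float, current_price: float) -> float:
    """Mark-to-market exposure of a position at the current price c."""
    return quantity * current_price
```

The `now` parameter exists so the freshness check can be tested deterministically; in production it would default to the wall clock.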

I am aware there are other kinds of data a market data handler deals with, but I intend to cover real-time data processing first. The above is high level; I will break it down further into coding blocks.

I will, of course, document whatever I am thinking, since I am prone to forgetfulness.

To implement this, I have to fetch historical data along with the real-time data, because the short-term and long-term moving averages are calculated from historical data.

I will be more comprehensive about this in the next post, but what I have understood from my research so far is that the buy and sell signals are generated by comparing the current short-term moving average (MA) with the current long-term MA, both derived from historical data: if current_short_ma > current_long_ma, then buy; otherwise, sell. The real-time current price from the live feed is then processed against this historical baseline.
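The rule above is a simple moving average (SMA) comparison. Here is a minimal Python sketch, assuming the historical data is a list of closing prices ordered oldest to newest and that the real-time price `c` is appended as the newest point; the 5/20 window sizes and the function names are hypothetical placeholders.

```python
from typing import List, Sequence


def simple_moving_average(prices: Sequence[float], window: int) -> float:
    """Mean of the last `window` prices."""
    if window <= 0 or len(prices) < window:
        raise ValueError("need a positive window and at least `window` prices")
    return sum(prices[-window:]) / window


def crossover_signal(history: Sequence[float], current_price: float,
                     short_window: int = 5, long_window: int = 20) -> str:
    """Append the real-time price c to historical closes, then compare short vs long SMA.

    BUY if the short MA is above the long MA, otherwise SELL.
    """
    prices: List[float] = list(history) + [current_price]
    short_ma = simple_moving_average(prices, short_window)
    long_ma = simple_moving_average(prices, long_window)
    return "BUY" if short_ma > long_ma else "SELL"
```

This returns a signal on every tick, exactly as the rule is stated. Many crossover strategies instead act only when the short MA crosses the long MA (that is, when the comparison flips), which avoids re-issuing the same order repeatedly.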

Stop-loss and take-profit levels also have to be set after this.
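One way to derive these levels from data points already captured follows the earlier idea that `h` marks resistance (a sell target) and `l` marks support (a buy target). Here is a sketch in Python; the `buffer_pct` margin and the exact placement of the levels are assumptions, not an established rule.

```python
from typing import Tuple


def stop_loss_take_profit(signal: str, high: float, low: float,
                          buffer_pct: float = 0.5) -> Tuple[float, float]:
    """Return (stop_loss, take_profit) derived from the day's high h and low l.

    Assumed heuristic: for a BUY, the stop sits buffer_pct below the day's low (support)
    and the target is the day's high (resistance); for a SELL (short) it is mirrored.
    """
    if low > high:
        raise ValueError("low must not exceed high")
    buffer = buffer_pct / 100
    if signal == "BUY":
        return low * (1 - buffer), high
    if signal == "SELL":
        return high * (1 + buffer), low
    raise ValueError(f"unknown signal: {signal}")
```

If the current price is already at the day's high, the BUY target collapses to the entry price, so a real implementation would need a fallback, such as a percentage-based target.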

All of this is business logic, but I still have to organize it into microservices. Which microservice should own it? The consumer microservice seems ideal, since the real-time data is available there, and I can call another endpoint on a cron schedule to get the historical data. But do I need to store the historical data in the database? That's the question that haunts me. I believe I do, because it would be useful for implementing various trading algorithms and risk management models.
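If the historical data does get stored, even a simple table keyed by symbol and timestamp is enough to feed the moving-average calculation. The sketch below uses Python's built-in `sqlite3` purely as a stand-in for a time-series database; the table layout and the helper names `open_store`, `save_quote`, and `last_prices` are hypothetical.

```python
import sqlite3
from typing import Dict, List


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the store with one row per (symbol, timestamp)."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS quotes (
               symbol TEXT NOT NULL,
               t      INTEGER NOT NULL,  -- Unix timestamp of the update
               c REAL, h REAL, l REAL, o REAL, pc REAL,
               PRIMARY KEY (symbol, t)
           )"""
    )
    return conn


def save_quote(conn: sqlite3.Connection, symbol: str, quote: Dict[str, float]) -> None:
    """Insert a quote; a repeated (symbol, t) overwrites the earlier row."""
    conn.execute(
        "INSERT OR REPLACE INTO quotes (symbol, t, c, h, l, o, pc) VALUES (?, ?, ?, ?, ?, ?, ?)",
        (symbol, quote["t"], quote["c"], quote["h"], quote["l"], quote["o"], quote["pc"]),
    )
    conn.commit()


def last_prices(conn: sqlite3.Connection, symbol: str, n: int) -> List[float]:
    """The n most recent current prices for symbol, oldest first (ready for a moving average)."""
    rows = conn.execute(
        "SELECT c FROM quotes WHERE symbol = ? ORDER BY t DESC LIMIT ?", (symbol, n)
    ).fetchall()
    return [row[0] for row in reversed(rows)]
```

The composite primary key makes re-fetching the same update idempotent, which matters if a cron job re-pulls overlapping history.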

I think once I implement various trading algorithms, I will understand this better.