Recently I was deploying a Shopify app (still under construction) to an Azure Kubernetes cluster when I hit this error: "No space left on device". Jenkins was down too (the page would not load). I knew right away that I had to do some disk space management and clean up old builds or other unwanted files.

But how? That was the question I asked myself. I started by visualizing my setup, which is simple: I had created a resource group, and under it there are many things, ranging from storage disks to a virtual machine to a Kubernetes cluster.

I had installed the Jenkins server on the virtual machine, so I quickly figured out that the VM's disk was running low on space and the server could not get enough room to load its startup files.

sudo systemctl start jenkins

sudo systemctl stop jenkins

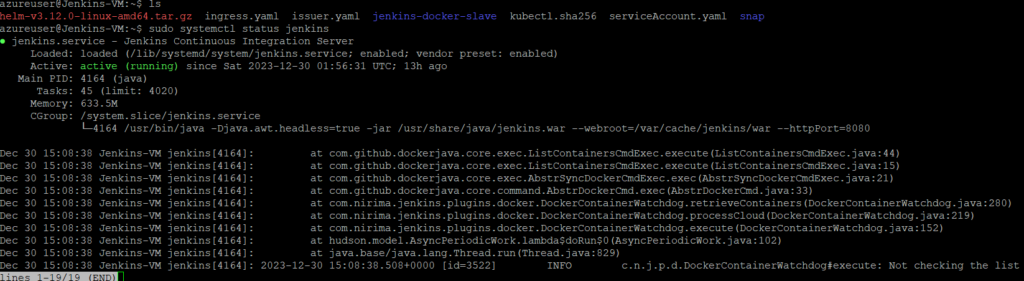

sudo systemctl status jenkins

The above commands are indispensable for checking whether the server is active. The status command also shows the error that explains why the server is not starting.
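As a rough sketch of that check, the helper below reports whether a unit is active and, if it is not, prints the last few journal lines, which is usually where the startup error shows up. The name service_report and the 20-line limit are my own choices for illustration; systemctl is-active and journalctl -u are standard systemd commands.

```shell
# Hypothetical helper: report whether a systemd unit is running and,
# if it is not, show its most recent log lines.
service_report() {
  unit="$1"
  if ! command -v systemctl >/dev/null 2>&1; then
    echo "systemctl not found; is this a systemd host?"
  elif systemctl is-active --quiet "$unit" 2>/dev/null; then
    echo "$unit is active"
  else
    echo "$unit is NOT active; recent log lines:"
    # Reading system logs may need sudo on some hosts
    journalctl -u "$unit" --no-pager -n 20 2>/dev/null || true
  fi
}

service_report jenkins
```

When the disk is full, the log lines typically mention the lack of space, which confirms the diagnosis before you start deleting anything.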

The solution is simple.

- Find out which kind of machine (operating system) you are using for the VM or host

- Find which files you want to remove

- Find which subdirectory has the most inodes

- Store build artifacts outside Jenkins in a private registry

In my case it was a Linux machine, so here are the commands I used to clear the filesystem on the virtual machine. Below are the steps to clean up disk space on a Linux distro.

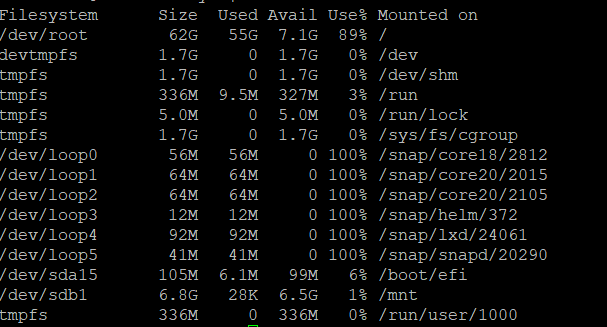

Start by figuring out which partition is full by using this command. It will give results like this.
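The command itself did not survive in the text above. df is the usual tool for this job, so here is a minimal sketch assuming that is what was meant: df -h gives the human-readable overview of all partitions, and the two helpers below (usage_percent and check_space, both names made up for this example) turn the same information into a quick check for one path. The 90% threshold is an arbitrary default.

```shell
# Print the usage percentage (without the % sign) of the filesystem
# that holds the given path. -P forces the portable one-line format.
usage_percent() {
  df -P "$1" | awk 'NR==2 { sub(/%/, "", $5); print $5 }'
}

# Warn when the filesystem is at or above a threshold (default 90).
check_space() {
  pct=$(usage_percent "$1")
  if [ "$pct" -ge "${2:-90}" ]; then
    echo "WARNING: $1 is ${pct}% full"
  else
    echo "OK: $1 is ${pct}% full"
  fi
}

# Replace / with the Jenkins home (for example /var/lib/jenkins) on the real host.
check_space /
# For inode exhaustion rather than byte exhaustion, look at: df -i
```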

Since we want to free space on the Jenkins server, we have to run the command below against the Jenkins home directory.

du -h /var/lib/jenkins

As you can see, there are subdirectories as well. You can use the command below to look one level down, sorted by size:

du -h --max-depth=1 / 2> /dev/null | sort -h

Additionally, you can use this command to list the 10 biggest directories under the current directory:

du -h --max-depth=1 2> /dev/null | sort -hr | tail -n +2 | head
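If you want the biggest-directories listing as something reusable, here is a small sketch. It is a variant, not the exact command above: it asks du for each immediate subdirectory separately, so there is no total line to strip. The function name largest_dirs is made up for illustration, and sizes are printed in KiB so a plain numeric sort is enough.

```shell
# List the N largest immediate subdirectories of a directory, biggest first.
# Output format: <size in KiB><TAB><path>
largest_dirs() {
  # find picks only the direct child directories; du -sk sums each one
  find "$1" -mindepth 1 -maxdepth 1 -type d -exec du -sk {} + 2>/dev/null |
    sort -rn |
    head -n "${2:-10}"
}

# Falls back to the default Debian/Ubuntu Jenkins home if JENKINS_HOME is unset
jenkins_home="${JENKINS_HOME:-/var/lib/jenkins}"
if [ -d "$jenkins_home" ]; then
  largest_dirs "$jenkins_home" 10
fi
```

Run it with sudo if parts of the Jenkins home are not readable by your user; otherwise those directories are silently skipped.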

We see there are cache-related, plugin-related, and job-related subdirectories, among other things.

Of these, we want to clear the cache to free up space. You can also spot this folder using the last command.

To delete the cached files, you can use this command:

sudo rm -rf /var/lib/jenkins/cache/*

Here is the result after I deleted the cache. The cache entries no longer show up.

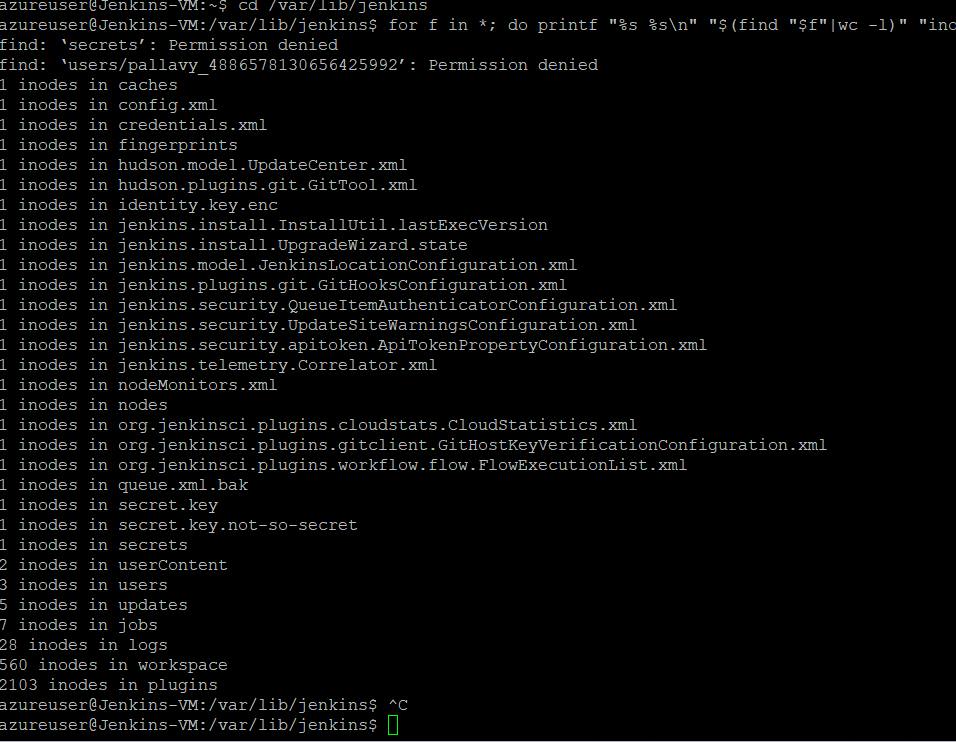
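Because rm -rf is unforgiving, it can help to preview what will be removed before deleting. The helper below is a sketch of that idea, not what I ran at the time: clean_dir_contents is a made-up name, it only lists the entries by default, and it deletes them when the second argument is exactly "apply". Unlike the /* glob, find also picks up hidden files.

```shell
# Hypothetical helper: list what would be removed from a directory and
# delete only when the second argument is exactly "apply".
clean_dir_contents() {
  dir="$1"
  mode="${2:-preview}"
  if [ ! -d "$dir" ]; then
    echo "skip: $dir is not a directory"
    return 0
  fi
  if [ "$mode" = "apply" ]; then
    # -mindepth 1 keeps the directory itself; only its contents go
    find "$dir" -mindepth 1 -maxdepth 1 -exec rm -rf {} +
    echo "removed contents of $dir"
  else
    find "$dir" -mindepth 1 -maxdepth 1
  fi
}

# Preview only; nothing is deleted here
clean_dir_contents /var/lib/jenkins/cache
# Real run (needs root on most installs, e.g. from a script run with sudo):
# clean_dir_contents /var/lib/jenkins/cache apply
```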

Now you can cd into the Jenkins home and check the inode count of each entry, including the cache.

for f in *; do printf "%s %s\n" "$(find "$f"|wc -l)" "inodes in $f";done|sort -n

Then you can cd into a specific directory and check the inodes there.

Apart from the cache, you can use the same strategy to clear the logs or temp folders, if any. These three folders (cache, logs, and temp) are the ones to target when you need to free space.

/var/lib/jenkins/logs

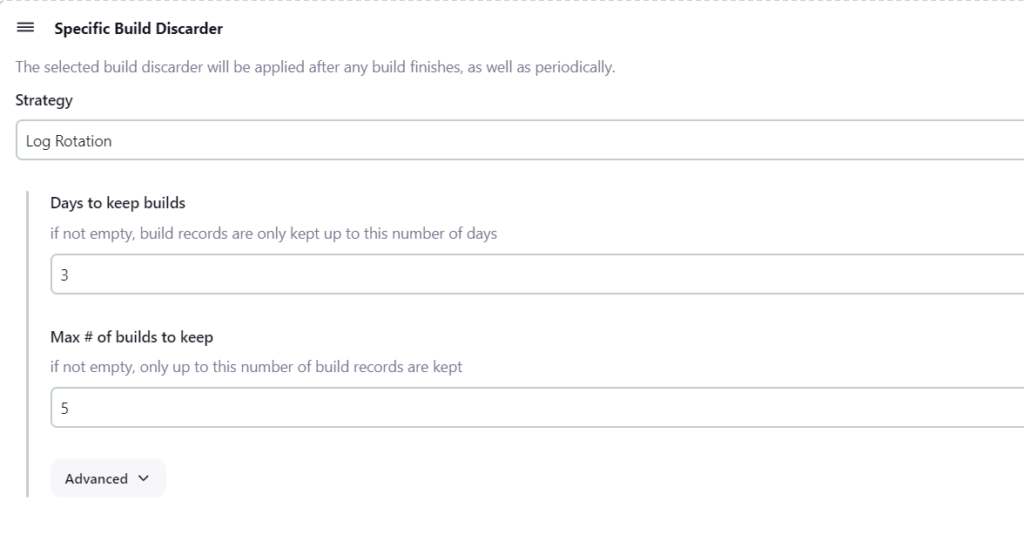
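For logs it is usually safer to remove only the old files instead of wiping the whole folder. Here is a minimal sketch of that, assuming an age cutoff is acceptable for your setup; old_files is a made-up helper name and 30 days is an arbitrary example.

```shell
# List regular files under a directory that were last modified
# more than N days ago. Review the list before deleting anything.
old_files() {
  find "$1" -type f -mtime +"$2"
}

# Example: log files untouched for more than 30 days
if [ -d /var/lib/jenkins/logs ]; then
  old_files /var/lib/jenkins/logs 30
fi
# To delete them after reviewing the list:
# sudo find /var/lib/jenkins/logs -type f -mtime +30 -delete
```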

One more thing you can do is configure Global Build Discarders so that older builds are discarded automatically.

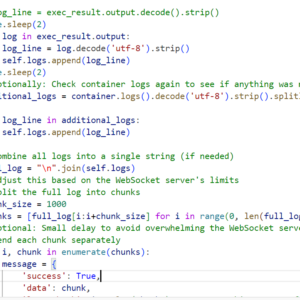

Plus, you can run this script in the script console to set a build discarder on every job that does not have one yet, so that old builds get cleaned up.

// NOTES:
// dryRun: to only list the jobs which would be changed
// daysToKeep: If not -1, history is only kept up to this day.
// numToKeep: If not -1, only this number of build logs are kept.
// artifactDaysToKeep: If not -1 nor null, artifacts are only kept up to this day.
// artifactNumToKeep: If not -1 nor null, only this number of builds have their artifacts kept.
import jenkins.model.Jenkins

// These variables are not defined in the script console, so set them here.
// The values below are only examples; adjust them to your needs.
def dryRun = "true"
def daysToKeep = -1
def numToKeep = 10
def artifactDaysToKeep = -1
def artifactNumToKeep = -1

Jenkins.instanceOrNull.allItems(hudson.model.Job).each { job ->
    // Only touch buildable jobs that support a LogRotator and have no discarder yet
    if (job.isBuildable() && job.supportsLogRotator() && job.getProperty(jenkins.model.BuildDiscarderProperty) == null) {
        println "Processing \"${job.fullDisplayName}\""
        if (!"true".equals(dryRun)) {
            // adding a property implicitly saves so no explicit one
            try {
                job.setBuildDiscarder(new hudson.tasks.LogRotator(daysToKeep, numToKeep, artifactDaysToKeep, artifactNumToKeep))
                println "${job.fullName} is updated"
            } catch (Exception e) {
                // Some implementation like for example the hudson.matrix.MatrixConfiguration supports a LogRotator but not setting it
                println "[WARNING] Failed to update ${job.fullName} of type ${job.class} : ${e}"
            }
        }
    }
}

return;

Then you can configure a third-party tool to store build artifacts.

For instance, I configured JFrog to push the zip files of the artifacts to JFrog local repositories.

Here is a quick link for the instant installation. Make sure the cloud host has 4 GB of RAM and 4 CPU cores for this installation.

To integrate with Jenkins, all you have to do is generate an access token (in the JFrog access token UI) and supply it on the Jenkins server to establish the connection. Then you can configure the JFrog CLI in the pipeline script and upload the artifacts.
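To give an idea of what the upload step can look like from a shell step in the pipeline, here is a sketch built on the JFrog CLI's jf rt upload command. Everything specific in it is a placeholder I made up: the upload_artifacts wrapper, the example URL, the repository path, and the ARTIFACTORY_URL and ARTIFACTORY_TOKEN variable names. With DRY_RUN=1 it only prints the command, without the token and without contacting any server.

```shell
# Hypothetical wrapper around the JFrog CLI upload command.
# ARTIFACTORY_URL and ARTIFACTORY_TOKEN are placeholder variable names.
upload_artifacts() {
  pattern="$1"   # local files, e.g. "build/*.zip"
  target="$2"    # repository path, e.g. "my-local-repo/my-app/"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    # Show the command with the token masked; nothing is uploaded
    echo "jf rt upload $pattern $target --url=$ARTIFACTORY_URL --access-token=***"
  else
    jf rt upload "$pattern" "$target" \
      --url="$ARTIFACTORY_URL" --access-token="$ARTIFACTORY_TOKEN"
  fi
}

# Placeholder URL; use your own Artifactory address
ARTIFACTORY_URL="https://artifactory.example.com/artifactory"
DRY_RUN=1 upload_artifacts "build/*.zip" "my-local-repo/my-app/"
```

In a real pipeline the token should come from the Jenkins credentials store rather than being written into the script.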

Apart from this, make sure you clean the workspace after each build so you do not run into this error again.

You can also use this command to remove unused packages and clear the apt cache on the host to free up space.

sudo apt autoremove && sudo apt autoclean && sudo apt clean

Hope this helps!