The workflow I have implemented is quite nested, so I had to think about where to start. I covered the essentials for understanding this code flow in my previous posts.

This post covers implementing it in Python.

Let me start with what the user is allowed to do.

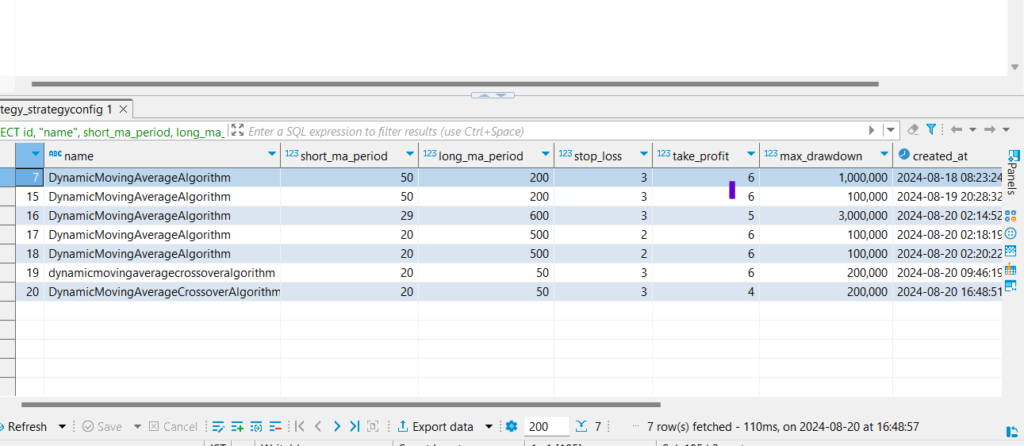

The first page lets the user create a strategy, which is stored in the database.

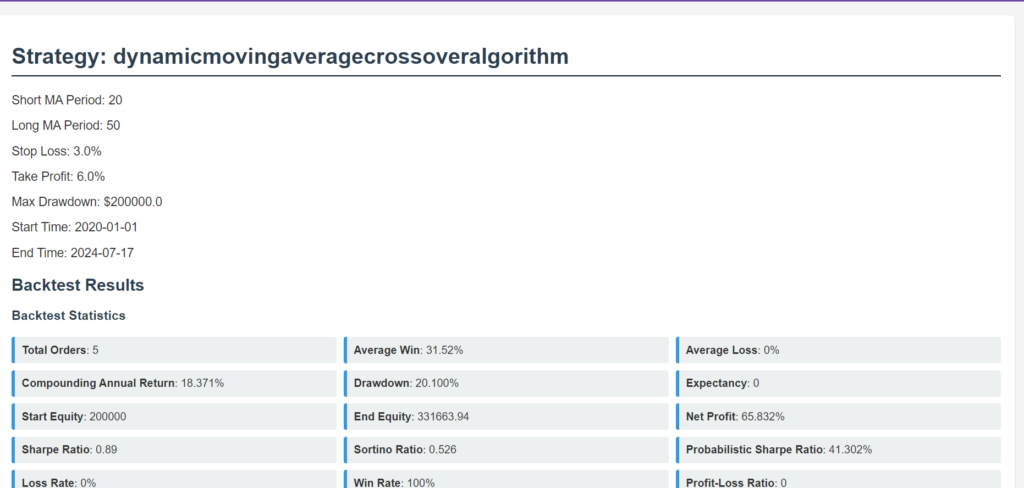

The details of a specific strategy are then accessed by its ID on this page.

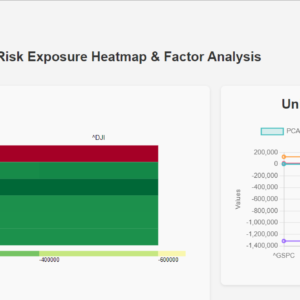

This page has a button that triggers the backtest on the backend and shows the result. The user can use this result to make a decision.

Code flow on the backend

Since I work mostly on the backend, I will describe that flow in detail; the frontend is kept to a minimal code base.

I configured a couple of endpoints.

This is the first endpoint that gets triggered:

```python
import asyncio
import json
import threading

import websockets
from django.http import JsonResponse

# extract_statistics_dict parses the Lean log into a statistics dict. It is defined
# elsewhere in the project; this module path is hypothetical.
from .utils import extract_statistics_dict

# Assumed module-level flag that another part of the app can set to stop waiting
# for Service B. Only is_set() is polled, so a thread-safe Event works here.
shutdown_event = threading.Event()


def run_backtest(request):
    try:
        # Parse the incoming request to get the strategy_id
        data = json.loads(request.body)
        print(f"data----------------------->{data}")
        strategy_id = data.get('strategy_id')
        if not strategy_id:
            return JsonResponse({'success': False, 'error': 'strategy_id is required'}, status=400)

        # Run the async WebSocket client on a fresh event loop
        loop = asyncio.new_event_loop()
        asyncio.set_event_loop(loop)
        try:
            result = loop.run_until_complete(run_backtest_ws(strategy_id))
        finally:
            loop.run_until_complete(loop.shutdown_asyncgens())
            loop.close()

        if result is None:
            return JsonResponse({'success': False, 'error': 'Backtest failed'}, status=502)
        return JsonResponse({'success': True, 'message': result})
    except Exception as e:
        return JsonResponse({'success': False, 'error': str(e)}, status=500)


async def run_backtest_ws(strategy_id):
    uri = "ws://tradingplatform:8010/ws/backtest/"
    try:
        # `async with` closes the connection on exit, including on errors
        async with websockets.connect(uri) as websocket:
            # Send the strategy ID to Service B
            await websocket.send(json.dumps({'strategy_id': strategy_id}))

            # Buffer for storing message chunks
            message_buffer = []

            # Listen for messages from Service B
            while not shutdown_event.is_set():
                try:
                    # Wake up every 5 seconds to re-check the shutdown flag
                    response = await asyncio.wait_for(websocket.recv(), timeout=5.0)
                except asyncio.TimeoutError:
                    print("No message received within timeout, checking shutdown signal...")
                    continue

                resDict = json.loads(response)
                if not isinstance(resDict, dict):
                    print("Response is not a dictionary.")
                    continue

                if not resDict.get("success"):
                    # Service B only sends success=False on errors, so stop waiting
                    print("Backtest error:", resDict.get("error"))
                    return None

                # Append the chunk to the buffer
                message_buffer.append(resDict['data'])

                # If it's the last chunk, process the full message
                if resDict.get('last_chunk', True):
                    full_message = ''.join(message_buffer)
                    res = extract_statistics_dict(full_message)
                    print(f"Processed result: {res}")
                    return res
    except Exception as e:
        print(f"WebSocket communication failed: {str(e)}")
    finally:
        print("WebSocket connection closed.")
    return None
```

Running async code in a sync view

The run_backtest view is synchronous, but it needs to call the async function run_backtest_ws. Calling an async function from synchronous code does not run it. It only returns a coroutine object, and an event loop has to drive that object. Creating a new event loop and calling run_until_complete on it lets the synchronous view execute the asynchronous code and wait for its result.

Creating a new event loop also keeps this operation separate from other asynchronous tasks that might be running elsewhere in the application, which can prevent conflicts between them. In the finally block, the loop's async generators are shut down and the loop is closed.
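Here is a minimal, self-contained sketch of the same pattern. A stand-in coroutine replaces the real WebSocket call; `fake_backtest_ws` and `run_coroutine_sync` are hypothetical names. The sketch shows that calling the coroutine function directly only builds a coroutine object, and that running it on a fresh event loop returns its result. `asyncio.run` performs the same create, run, shut down and close sequence in a single call.

```python
import asyncio


async def fake_backtest_ws(strategy_id):
    """Hypothetical stand-in for run_backtest_ws: wait briefly, then return a result."""
    await asyncio.sleep(0.01)
    return {"strategy_id": strategy_id, "status": "done"}


def run_coroutine_sync(coro):
    """Run a coroutine to completion from synchronous code on a fresh event loop."""
    loop = asyncio.new_event_loop()
    asyncio.set_event_loop(loop)
    try:
        return loop.run_until_complete(coro)
    finally:
        # Same cleanup as the view: finalize async generators, then close the loop
        loop.run_until_complete(loop.shutdown_asyncgens())
        loop.close()
        asyncio.set_event_loop(None)  # don't leave a closed loop registered


# Calling the async function directly does not run it; it only builds a coroutine object
pending = fake_backtest_ws(42)
print(type(pending).__name__)  # coroutine
pending.close()  # discard it without running, to avoid a "never awaited" warning

print(run_coroutine_sync(fake_backtest_ws(42)))

# asyncio.run() creates, runs and closes a new loop in one call
print(asyncio.run(fake_backtest_ws(7)))
```

In a plain sync view, `asyncio.run(run_backtest_ws(strategy_id))` would be an equivalent, shorter spelling of the loop handling above.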

Calling the websocket handler

I used a WebSocket because the backtest takes some time to compute, so I didn't want to use a regular HTTP request through the requests module. Below is the entire consumer class. It lives in the trading-platform service, and the consumer service makes the WebSocket call to it.

```python
import asyncio
import io
import json
import os
import tarfile
import uuid

import docker
import requests
from channels.generic.websocket import AsyncWebsocketConsumer
from docker.errors import DockerException


class BacktestConsumer(AsyncWebsocketConsumer):
    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self.client = docker.from_env()  # Initialize Docker client
        self.is_execution_complete = False
        self.previous_results = None  # To store results of the previous execution
        self.logs = []

    async def connect(self):
        await self.accept()

    async def disconnect(self, close_code):
        pass

    async def receive(self, text_data=None, bytes_data=None):
        data = json.loads(text_data)
        strategy_id = data.get('strategy_id')
        if strategy_id:
            await self.run_backtest(strategy_id)

    async def run_backtest(self, strategy_id):
        try:
            # Check if the execution is already complete
            if self.is_execution_complete:
                await self.send_message({
                    'success': True,
                    'data': self.previous_results,
                    'message': 'Backtest already completed, returning previous results.'
                })
                return

            # Get the strategy configuration
            strategy_data = await self.fetch_strategy_config(strategy_id)
            print(f"strategy_data--------------------------->{strategy_data}")
            if not strategy_data:
                return

            # Generate the config data for the backtest
            config_data = self.generate_config_data(strategy_data)

            # Write the config.json file to a temporary location
            temp_config_path = '/tmp/config.json'
            self.write_config(temp_config_path, config_data)

            # Copy the config.json into the Docker container
            container_name = 'lean-engine'
            config_file_path_in_container = '/Lean/config.json'
            if not await self.copy_file_to_container(temp_config_path, container_name, config_file_path_in_container):
                return

            # Run the Lean engine with the updated config.json
            if not await self.run_lean_engine(container_name):
                return

            self.is_execution_complete = True  # Set the flag after successful execution
            await self.send_message({'success': True, 'data': 'Backtest completed successfully.'})
        except Exception as e:
            await self.send_message({'success': False, 'error': f'An error occurred: {str(e)}'})

    async def fetch_strategy_config(self, strategy_id):
        """Fetch strategy configuration from an external service."""
        strategy_url = f"http://tradingplatform:8010/api/strategy/{strategy_id}/"
        # requests is blocking, so run it in a worker thread
        response = await asyncio.to_thread(requests.get, strategy_url)
        if response.status_code == 200:
            return response.json()
        await self.send_message({'success': False, 'error': 'Strategy not found'})
        return None

    def generate_config_data(self, strategy_data):
        """Generate the configuration data for the backtest."""
        algorithm_name = strategy_data.get('name', 'DynamicMovingAverageAlgorithm')
        short_ma_period = strategy_data.get('short_ma_period')
        long_ma_period = strategy_data.get('long_ma_period')
        max_drawdown = strategy_data.get('max_drawdown')
        stock = strategy_data.get('stock')
        start_time = strategy_data.get('start_date')
        end_time = strategy_data.get('end_date')
        backtest_id = str(uuid.uuid4())

        return {
            "environment": "backtesting",
            "algorithm-type-name": algorithm_name,
            "algorithm-language": "Python",
            "algorithm-location": "Algorithm.Python/DynamicMovingAverageAlgorithm.py",
            "data-folder": "/Lean/Data",
            "debugging": False,
            "debugging-method": "LocalCmdLine",
            "log-handler": "ConsoleLogHandler",
            "messaging-handler": "QuantConnect.Messaging.Messaging",
            "job-queue-handler": "QuantConnect.Queues.JobQueue",
            "api-handler": "QuantConnect.Api.Api",
            "map-file-provider": "QuantConnect.Data.Auxiliary.LocalDiskMapFileProvider",
            "factor-file-provider": "QuantConnect.Data.Auxiliary.LocalDiskFactorFileProvider",
            "data-provider": "QuantConnect.Lean.Engine.DataFeeds.DefaultDataProvider",
            "object-store": "QuantConnect.Lean.Engine.Storage.LocalObjectStore",
            "data-aggregator": "QuantConnect.Lean.Engine.DataFeeds.AggregationManager",
            "symbol-minute-limit": 10000,
            "symbol-second-limit": 10000,
            "symbol-tick-limit": 10000,
            "show-missing-data-logs": True,
            "maximum-warmup-history-days-look-back": 5,
            "maximum-data-points-per-chart-series": 1000000,
            "maximum-chart-series": 30,
            "force-exchange-always-open": False,
            "transaction-log": "",
            "reserved-words-prefix": "@",
            "job-user-id": "0",
            "api-access-token": "",
            "job-organization-id": "",
            "log-level": "trace",
            "debug-mode": True,
            "results-destination-folder": f"/Lean/Results/{backtest_id}",
            "mute-python-library-logging": "False",
            "parameters": {
                "ShortMAPeriod": short_ma_period,
                "LongMAPeriod": long_ma_period,
                "StartDate": start_time,
                "EndDate": end_time,
                "InitialCash": max_drawdown,
                "Stock": stock
            },
            "python-additional-paths": [],
            "environments": {
                "backtesting": {
                    "live-mode": False,
                    "setup-handler": "QuantConnect.Lean.Engine.Setup.BacktestingSetupHandler",
                    "result-handler": "QuantConnect.Lean.Engine.Results.BacktestingResultHandler",
                    "data-feed-handler": "QuantConnect.Lean.Engine.DataFeeds.FileSystemDataFeed",
                    "real-time-handler": "QuantConnect.Lean.Engine.RealTime.BacktestingRealTimeHandler",
                    "history-provider": ["QuantConnect.Lean.Engine.HistoricalData.SubscriptionDataReaderHistoryProvider"],
                    "transaction-handler": "QuantConnect.Lean.Engine.TransactionHandlers.BacktestingTransactionHandler"
                }
            }
        }

    def write_config(self, path, data):
        """Helper method to write config to a file."""
        with open(path, 'w') as f:
            json.dump(data, f, indent=4)

    async def copy_file_to_container(self, src_path, container_name, dest_path):
        """Copy a file from the host to a Docker container using a tar archive."""
        try:
            container = self.client.containers.get(container_name)
            # Create a tar archive in memory
            tar_stream = io.BytesIO()
            with tarfile.open(fileobj=tar_stream, mode='w') as tar:
                tar.add(src_path, arcname=os.path.basename(dest_path))
            tar_stream.seek(0)  # Rewind the file pointer to the start of the stream
            # Docker extracts the archive into the target directory inside the container
            container.put_archive(os.path.dirname(dest_path), tar_stream)
            return True
        except DockerException as e:
            await self.send_message({'success': False, 'error': f"Error copying config.json to container: {str(e)}"})
            return False

    def _collect_exec_logs(self, container):
        """Run the Lean launcher and collect its output (blocking; call it from a worker thread)."""
        exec_result = container.exec_run(
            'dotnet /Lean/QuantConnect.Lean.Launcher.dll',
            stdout=True,
            stderr=True,
            stream=True
        )
        lines = []
        # With stream=True, output is a generator that yields data until the command exits
        for log in exec_result.output:
            lines.append(log.decode('utf-8').strip())
        # Optionally: also read the container logs. These come from the container's
        # main process (its CMD), not from the exec'd command above.
        lines.extend(container.logs().decode('utf-8').strip().splitlines())
        return lines

    async def run_lean_engine(self, container_name):
        """Run the Lean engine DLL inside the Docker container and stream the result back."""
        try:
            container = self.client.containers.get(container_name)
            # The Docker SDK is synchronous, so keep it off the event loop
            self.logs.extend(await asyncio.to_thread(self._collect_exec_logs, container))

            # Combine all logs into a single string and keep it for repeat requests
            full_log = "\n".join(self.logs)
            self.previous_results = full_log

            # Split the full log into chunks; adjust chunk_size to the WebSocket server's limits
            chunk_size = 1000
            chunks = [full_log[i:i+chunk_size] for i in range(0, len(full_log), chunk_size)]

            # Send each chunk separately, with a small delay to avoid overwhelming the server
            for i, chunk in enumerate(chunks):
                message = {
                    'success': True,
                    'data': chunk,
                    'last_chunk': i == len(chunks) - 1  # True only for the last chunk
                }
                await self.send_message(message)
                await asyncio.sleep(0.1)
            return True
        except DockerException as e:
            await self.send_message({'success': False, 'error': f"Error running Lean engine: {str(e)}"})
            return False

    async def send_message(self, message):
        """Helper method to send a message over WebSocket."""
        await self.send(json.dumps(message))
```

Here is the sequence of events.

- Initialize the essential variables:

```python
def __init__(self, *args, **kwargs):
    super().__init__(*args, **kwargs)
    self.client = docker.from_env()  # Initialize Docker client
    self.is_execution_complete = False
    self.previous_results = None  # To store results of the previous execution
    self.logs = []
```

- Handle the connect, disconnect and receive events.
- receive gets the strategy_id from the sender and calls run_backtest, the custom method that holds the actual logic:

```python
async def receive(self, text_data=None, bytes_data=None):
    data = json.loads(text_data)
    strategy_id = data.get('strategy_id')
    if strategy_id:
        await self.run_backtest(strategy_id)
```

- run_backtest performs a few subtasks:
  - It checks whether the backtest has already been run; if so, it returns the previous results. Because Channels creates a new consumer instance for each connection, this cached result is only reused within the same WebSocket connection.
  - It uses the strategy_id to fetch the strategy configuration, which is stored in the database, through the strategy API.
  - From the strategy data, it builds the config object that replaces the config.json file inside the Lean container.
  - It writes this config to a temporary file and copies that file over the real config.json in the container.
  - Once the config file is in place, it runs the Lean launcher command.
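The copy step works because the Docker SDK's `put_archive` does not take a plain file. It takes tar data and extracts it into the target directory, which is why `copy_file_to_container` wraps the config in an in-memory tar stream. The stdlib-only sketch below builds that archive straight from the config dictionary, skipping the temporary file (a variation on the approach above). It then reads the archive back to show what the container would receive. `build_config_tar` is a hypothetical helper name.

```python
import io
import json
import tarfile
import time


def build_config_tar(config, arcname="config.json"):
    """Serialize a config dict and wrap it in an in-memory tar archive (hypothetical helper)."""
    payload = json.dumps(config, indent=4).encode("utf-8")
    info = tarfile.TarInfo(name=arcname)
    info.size = len(payload)       # tarfile needs the exact byte count up front
    info.mtime = int(time.time())  # otherwise the file is dated 1970
    stream = io.BytesIO()
    with tarfile.open(fileobj=stream, mode="w") as tar:
        tar.addfile(info, io.BytesIO(payload))
    return stream.getvalue()


archive = build_config_tar({"environment": "backtesting", "parameters": {"ShortMAPeriod": 10}})

# Read the archive back to see what Docker would extract into /Lean
with tarfile.open(fileobj=io.BytesIO(archive), mode="r") as tar:
    member = tar.getmember("config.json")
    restored = json.load(tar.extractfile(member))
print(member.name, member.size)
print(restored["parameters"])

# With the Docker SDK, the bytes would then be passed as:
#   container.put_archive("/Lean", archive)
```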

async def run_backtest(self, strategy_id):

try:

# Check if the execution is already complete

if self.is_execution_complete:

await self.send_message({

'success': True,

'data': self.previous_results,

'message': 'Backtest already completed, returning previous results.'

})

return

# Get the strategy configuration

strategy_data = await self.fetch_strategy_config(strategy_id)

print(f"strategy_data--------------------------->{strategy_data}")

if not strategy_data:

return

# Generate the config data for the backtest

config_data = self.generate_config_data(strategy_data)

# Write the config.json file to a temporary location

temp_config_path = '/tmp/config.json'

self.write_config(temp_config_path, config_data)

# Copy the config.json into the Docker container

container_name = 'lean-engine'

config_file_path_in_container = '/Lean/config.json'

if not await self.copy_file_to_container(temp_config_path, container_name, config_file_path_in_container):

return

# Run the Lean engine with the updated config.json

if not await self.run_lean_engine(container_name):

return

self.is_execution_complete = True # Set the flag after successful execution

await self.send_message({'success': True, 'data': 'Backtest completed successfully.'})

except Exception as e:

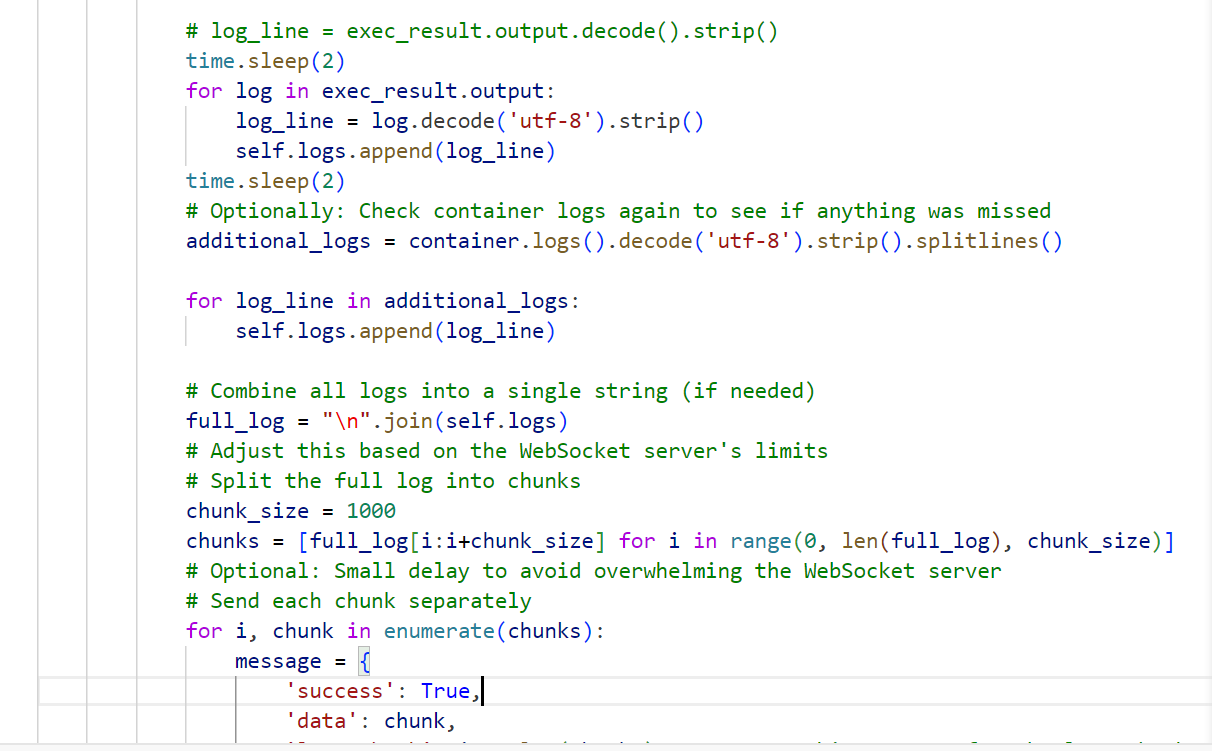

await self.send_message({'success': False, 'error': f'An error occurred: {str(e)}'})- Now inside run_lean_engine after the execution happens, the results are gathered in the array container

- Combine everything in an array in a single string and create chunks of the strings in a for loop.

- Send each chunk separately

- This takes care if the string is too long
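The chunking step reduces to slicing the joined log into fixed-size pieces and flagging the final one. This sketch isolates that step as a pure function so the message format is easy to see; `build_chunk_messages` is a hypothetical name, and the default of 1000 characters mirrors `chunk_size` in the consumer. It also differs from the consumer in one respect: it sends a single empty final message when there is no output, so a receiver waiting for `last_chunk` never hangs.

```python
def build_chunk_messages(log_lines, chunk_size=1000):
    """Join log lines and split them into WebSocket-sized messages (hypothetical helper)."""
    full_log = "\n".join(log_lines)
    chunks = [full_log[i:i + chunk_size] for i in range(0, len(full_log), chunk_size)]
    if not chunks:
        chunks = [""]  # still send one (empty) final message so the receiver stops waiting
    return [
        {"success": True, "data": chunk, "last_chunk": i == len(chunks) - 1}
        for i, chunk in enumerate(chunks)
    ]


messages = build_chunk_messages(["line %d" % n for n in range(300)])
print(len(messages), [m["last_chunk"] for m in messages])
```

Joining the `data` fields of all messages in order reproduces the original log exactly, which is what the receiver relies on.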

```python
def _collect_exec_logs(self, container):
    """Run the Lean launcher and collect its output (blocking; call it from a worker thread)."""
    exec_result = container.exec_run(
        'dotnet /Lean/QuantConnect.Lean.Launcher.dll',
        stdout=True,
        stderr=True,
        stream=True
    )
    lines = []
    # With stream=True, output is a generator that yields data until the command exits
    for log in exec_result.output:
        lines.append(log.decode('utf-8').strip())
    # Optionally: also read the container logs. These come from the container's
    # main process (its CMD), not from the exec'd command above.
    lines.extend(container.logs().decode('utf-8').strip().splitlines())
    return lines


async def run_lean_engine(self, container_name):
    """Run the Lean engine DLL inside the Docker container and stream the result back."""
    try:
        container = self.client.containers.get(container_name)
        # The Docker SDK is synchronous, so keep it off the event loop
        self.logs.extend(await asyncio.to_thread(self._collect_exec_logs, container))

        # Combine all logs into a single string and keep it for repeat requests
        full_log = "\n".join(self.logs)
        self.previous_results = full_log

        # Split the full log into chunks; adjust chunk_size to the WebSocket server's limits
        chunk_size = 1000
        chunks = [full_log[i:i+chunk_size] for i in range(0, len(full_log), chunk_size)]

        # Send each chunk separately, with a small delay to avoid overwhelming the server
        for i, chunk in enumerate(chunks):
            message = {
                'success': True,
                'data': chunk,
                'last_chunk': i == len(chunks) - 1  # True only for the last chunk
            }
            await self.send_message(message)
            await asyncio.sleep(0.1)
        return True
    except DockerException as e:
        await self.send_message({'success': False, 'error': f"Error running Lean engine: {str(e)}"})
        return False
```

- On the receiving side, run_backtest_ws decodes each incoming message into a dictionary and appends its data to a buffer until the message flagged last_chunk arrives.
- The joined buffer is then passed to extract_statistics_dict, which parses it and returns the result.
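You can exercise the receiving side without a network by swapping the WebSocket for an `asyncio.Queue`. In this sketch, `collect_chunks` is a hypothetical name, and `recv` is any coroutine function that returns the next raw message, standing in for `websocket.recv()`. Each message is decoded and its data buffered, and the joined text is returned once `last_chunk` arrives. The sketch keeps the original pattern of waking up on a timeout to re-check a shutdown flag. Raising on an error message is an assumption made here so the caller stops waiting.

```python
import asyncio
import json


async def collect_chunks(recv, shutdown_event, timeout=5.0):
    """Buffer chunk payloads until the message flagged last_chunk arrives (hypothetical helper).

    recv is any coroutine function returning the next raw JSON message,
    playing the role of websocket.recv().
    """
    buffer = []
    while not shutdown_event.is_set():
        try:
            raw = await asyncio.wait_for(recv(), timeout=timeout)
        except asyncio.TimeoutError:
            continue  # nothing arrived in time; loop around and re-check the shutdown flag
        message = json.loads(raw)
        if not message.get("success"):
            raise RuntimeError(message.get("error", "unknown error"))
        buffer.append(message["data"])
        # Same default as run_backtest_ws: a message without the flag is treated as final
        if message.get("last_chunk", True):
            return "".join(buffer)
    return None  # shutdown was requested before the last chunk arrived


async def demo():
    # An asyncio.Queue stands in for the WebSocket connection
    queue = asyncio.Queue()
    parts = ["chunk-1|", "chunk-2"]
    for i, part in enumerate(parts):
        await queue.put(json.dumps({"success": True, "data": part, "last_chunk": i == len(parts) - 1}))
    return await collect_chunks(queue.get, asyncio.Event(), timeout=1.0)


print(asyncio.run(demo()))  # chunk-1|chunk-2
```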

That covers how data flows between the two services and the lean-engine container to produce the result.

How I created this Lean Engine

The Lean engine is a Docker image that recreates the directory structure of the Lean repository, so that all the files are in place and the launcher command can be executed.

Here is the Dockerfile:

```dockerfile
# Stage 1: Mono installation in an isolated stage using Ubuntu
FROM ubuntu:20.04 AS mono-env

# Set environment variable to avoid interactive prompts during installation
ENV DEBIAN_FRONTEND=noninteractive
ENV LD_LIBRARY_PATH=/usr/local/lib:/usr/lib/mono:/usr/lib/x86_64-linux-gnu

# Install dependencies for Mono and libjpeg8
RUN apt-get update && \
    apt-get install -y wget gnupg dirmngr ca-certificates apt-transport-https \
    libjpeg8 libpng-dev libtiff-dev libgif-dev && \
    apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv-keys 3FA7E0328081BFF6A14DA29AA6A19B38D3D831EF && \
    echo "deb https://download.mono-project.com/repo/ubuntu stable-focal main" | tee /etc/apt/sources.list.d/mono-official-stable.list && \
    apt-get update && \
    apt-get install -y mono-complete && \
    apt-get clean && rm -rf /var/lib/apt/lists/*

# Print where libmonosgen-2.0.so.1 was installed (useful for the COPY steps in later stages)
RUN find / -name "libmonosgen-2.0.so.1" 2>/dev/null

# Stage 2: Python and .NET setup in another isolated stage using Ubuntu
FROM ubuntu:20.04 AS python-dotnet-env

# Set environment variables
ENV DEBIAN_FRONTEND=noninteractive
ENV DOTNET_CLI_TELEMETRY_OPTOUT=1
ENV LC_ALL=C.UTF-8
ENV PYTHONNET_RUNTIME=coreclr
ENV PYTHONNET_PYDLL=/usr/local/lib/libpython3.8.so
ENV LD_LIBRARY_PATH=/usr/local/lib:/usr/lib/mono:/usr/lib/x86_64-linux-gnu

# Set PYTHONPATH to ensure the Lean Python modules are accessible
ENV PYTHONPATH=/Lean/Algorithm.Python:/Lean/Launcher

# Install Mono to ensure libmono is available
RUN apt-get update && \
    apt-get install -y wget gnupg dirmngr ca-certificates apt-transport-https \
    libjpeg8 libpng-dev libtiff-dev libgif-dev && \
    apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv-keys 3FA7E0328081BFF6A14DA29AA6A19B38D3D831EF && \
    echo "deb https://download.mono-project.com/repo/ubuntu stable-focal main" | tee /etc/apt/sources.list.d/mono-official-stable.list && \
    apt-get update && \
    apt-get install -y mono-complete && \
    apt-get clean && rm -rf /var/lib/apt/lists/*

# Install dependencies for Python and .NET SDK
RUN apt-get update && \
    apt-get install -y build-essential libssl-dev zlib1g-dev libncurses-dev \
    libffi-dev libsqlite3-dev libreadline-dev libbz2-dev liblzma-dev && \
    apt-get clean && rm -rf /var/lib/apt/lists/*

# Download and install Python 3.8
RUN wget https://www.python.org/ftp/python/3.8.10/Python-3.8.10.tgz && \
    tar xzf Python-3.8.10.tgz && \
    cd Python-3.8.10 && \
    ./configure --enable-optimizations --enable-shared && \
    make altinstall && \
    rm -rf /Python-3.8.10.tgz /Python-3.8.10

# Verify that libpython3.8.so exists
RUN find /usr/local/lib -name "libpython3.8.so" -print || echo "libpython3.8.so not found!"
RUN chmod 755 /usr/local/lib/libpython3.8.so

# Verify that the library and environment variables are correctly set up
RUN ls -l /usr/local/lib # Check that the library file is in the expected location
RUN env # List all environment variables to verify they are set correctly

# Run a simple test to check if the library can be loaded
RUN python3.8 -c "import ctypes; ctypes.CDLL('/usr/local/lib/libpython3.8.so')"

# Install pip for Python 3.8
RUN python3.8 -m ensurepip --upgrade && \
    python3.8 -m pip install --upgrade pip setuptools wheel

# Install Python.NET
RUN python3.8 -m pip install pythonnet==2.5.2

# Install necessary Python packages like pandas
RUN python3.8 -m pip install pandas numpy matplotlib scipy quantconnect

# Verify Python.NET installation
RUN python3.8 -m pip show pythonnet

# Debugging: Check the PYTHONNET_PYDLL value and file existence
RUN echo "PYTHONNET_PYDLL is set to: $PYTHONNET_PYDLL" && ls -l $PYTHONNET_PYDLL

# Add the test script
RUN echo "import clr; clr.AddReference('System'); from System import DateTime; print('Current DateTime from .NET:', DateTime.Now)" > test_pythonnet.py

# Run the test script
RUN python3.8 test_pythonnet.py

# Stage 3: Combine Mono and Python/.NET environments and build the project using the .NET SDK
FROM mcr.microsoft.com/dotnet/sdk:6.0-focal AS build-env

# Set environment variables
ENV DOTNET_CLI_TELEMETRY_OPTOUT=1
ENV LC_ALL=C.UTF-8
ENV PYTHONNET_PYDLL=/usr/local/lib/libpython3.8.so
ENV LD_LIBRARY_PATH=/usr/local/lib:/usr/lib/mono:/usr/lib/x86_64-linux-gnu

# Set PYTHONPATH to ensure the Lean Python modules are accessible
ENV PYTHONPATH=/Lean/Algorithm.Python:/Lean/Launcher

# Reinstall libselinux1 to fix the library version issue
RUN apt-get update && \
    apt-get install --reinstall -y libselinux1

# Set the working directory
WORKDIR /Lean

# Copy Mono installation from the first stage
COPY --from=mono-env /usr/lib/mono /usr/lib/mono
COPY --from=mono-env /usr/lib/cli /usr/lib/cli
COPY --from=mono-env /usr/lib/x86_64-linux-gnu /usr/lib/x86_64-linux-gnu
COPY --from=mono-env /usr/lib/libmonosgen-2.0.so.1 /usr/lib/libmonosgen-2.0.so.1
COPY --from=mono-env /usr/lib/x86_64-linux-gnu/libmono* /usr/lib/x86_64-linux-gnu/
COPY --from=mono-env /usr/lib/libmono* /usr/lib/
COPY --from=mono-env /usr/bin/mono* /usr/bin/
COPY --from=mono-env /usr/share/mono /usr/share/mono

# Copy Python and .NET setup from the second stage
COPY --from=python-dotnet-env /usr/local /usr/local

# Set the PYTHONPATH environment variable
ENV PYTHONPATH=/Lean/Algorithm.Python

RUN rm -f /Lean/Algorithm.Python/MovingAverageAlgorithm.py

# Ensure the old files are removed
RUN rm -rf /Lean/Algorithm.Python/DynamicMovingAverageAlgorithm.py

# Copy the Lean directory from the local machine to the Docker container
COPY ./Lean /Lean

# After installing everything, verify the environment
RUN echo "import sys; print(sys.path)" > /Lean/Algorithm.Python/test_env.py
RUN python3.8 /Lean/Algorithm.Python/test_env.py

# Verify that the algorithm file exists
RUN ls -l /Lean/Algorithm.Python/DynamicMovingAverageAlgorithm.py

# Verify that the project files are in the correct location
RUN ls -l /Lean || true

# Verify that the .NET SDKs are installed correctly
RUN dotnet --list-sdks

# Verify that Python.NET is installed
RUN python3.8 -m pip list | grep pythonnet

# Restore NuGet packages
RUN dotnet restore /Lean/QuantConnect.Lean.sln

# Build the solution, including all C# projects
RUN dotnet build QuantConnect.Lean.sln -c Release --output /Lean/build

# Copy config.json to the build directory
COPY config.json /Lean/build/

# Use the same .NET SDK image for running the application to keep the environment consistent
FROM mcr.microsoft.com/dotnet/sdk:6.0-focal

# Copy Python installation from the second stage
COPY --from=python-dotnet-env /usr/local/bin/python3.8 /usr/local/bin/python3.8
COPY --from=python-dotnet-env /usr/local/lib/libpython3.8* /usr/local/lib/
COPY --from=python-dotnet-env /usr/local/include/python3.8 /usr/local/include/python3.8
COPY --from=python-dotnet-env /usr/local/lib/python3.8 /usr/local/lib/python3.8
COPY --from=python-dotnet-env /usr/local/bin/pip3 /usr/local/bin/pip3

# Set environment variables
ENV DOTNET_CLI_TELEMETRY_OPTOUT=1
ENV LC_ALL=C.UTF-8
ENV PYTHONNET_PYDLL=/usr/local/lib/libpython3.8.so
ENV PYTHONPATH=/Lean/Algorithm.Python:/Lean
ENV LD_LIBRARY_PATH=/usr/local/lib:/usr/lib/mono:/usr/lib/x86_64-linux-gnu

COPY --from=mono-env /usr/lib/mono /usr/lib/mono
COPY --from=mono-env /usr/lib/libmono* /usr/lib/
COPY --from=mono-env /usr/lib/libmonosgen-2.0.so.1 /usr/lib/libmonosgen-2.0.so.1
COPY --from=mono-env /usr/lib/x86_64-linux-gnu/libmono* /usr/lib/x86_64-linux-gnu/
COPY --from=mono-env /usr/bin/mono* /usr/bin/
COPY --from=mono-env /usr/share/mono /usr/share/mono

# Set the working directory
WORKDIR /Lean

# Create the Cache directory
RUN mkdir -p /Lean/Cache
RUN echo "dummy cache file" > /Lean/Cache/dummy.txt

# Copy the built files from the previous stage
COPY --from=build-env /Lean /Lean
COPY --from=build-env /Lean/build /Lean/
# COPY --from=build-env /Lean/build/*.dll /Lean/
# COPY --from=build-env /Lean/build/*.exe /Lean/
# COPY --from=build-env /Lean/build/*.json /Lean/

# Copy the rest of the Lean directory (including Python scripts)
COPY --from=build-env /Lean/Algorithm.Python /Lean/Algorithm.Python

# Set permissions for the DynamicMovingAverageAlgorithm.py file
RUN chmod 644 /Lean/Algorithm.Python/DynamicMovingAverageAlgorithm.py

# Verify the config.json file is in the final build directory
RUN echo "Checking if config.json is in the final /Lean/build directory:" && ls -l /Lean/build/config.json
RUN echo "Checking if DynamicMovingAverageAlgorithm.py is in the final /Lean/Algorithm.Python directory:" && ls -l /Lean/Algorithm.Python

# Verify the contents of the root directory
RUN echo "Checking contents of /Lean directory:" && ls -l /Lean

# Add debugging steps
# Print environment variables
RUN echo "Printing environment variables before running Lean:" && env

# List the contents of critical directories
RUN echo "Listing /usr/local/lib directory:" && ls -l /usr/local/lib
RUN echo "Listing /Lean/Algorithm.Python directory:" && ls -l /Lean/Algorithm.Python

# Verify that the Python modules are in the expected location
RUN echo "Python paths:" && python3.8 -c "import sys; print(sys.path)"
RUN ls -l /Lean/Algorithm.Python

# Verify that the project files are in the correct location
RUN ls -l /Lean

# # Add a script to test Python.NET integration
# RUN echo "import clr; clr.AddReference('System'); from System import DateTime; print('Current DateTime from .NET:', DateTime.Now)" > test_pythonnet.py
# RUN python3.8 test_pythonnet.py

# Define the entry point for the Docker container
CMD ["dotnet", "/Lean/QuantConnect.Lean.Launcher.dll"]
```

- Install Mono so that libmono is available. This library is needed to install pythonnet in a later layer; pythonnet is the bridge between Python and .NET.
- Install the dependencies for Python and the .NET SDK.
- Download and install Python 3.8.
- Install pip for Python 3.8.
- Install Python.NET.
- Install the necessary Python packages, such as pandas.
- Combine the Mono and Python/.NET environments and build the project using the .NET SDK.
- Copy all the build files from the previous stage into the final stage, so that all the packages are available there.
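The image only works if the files land where `config.json` and the exec command expect them. A small preflight check can therefore catch a broken build before a backtest is triggered. The sketch below is a hypothetical helper, not part of the original setup. The relative paths come from the Dockerfile and the consumer code, and `lean_root` would be `/Lean` inside the container.

```python
from pathlib import Path

# Files the consumer and the final image rely on, relative to the Lean root
REQUIRED_LEAN_FILES = (
    "QuantConnect.Lean.Launcher.dll",
    "config.json",
    "Algorithm.Python/DynamicMovingAverageAlgorithm.py",
)


def missing_lean_files(lean_root="/Lean", required=REQUIRED_LEAN_FILES):
    """Return the required files that are absent under lean_root (hypothetical preflight check)."""
    root = Path(lean_root)
    return [rel for rel in required if not (root / rel).is_file()]
```

Run inside the lean-engine container with `python3.8`, `missing_lean_files()` should return an empty list for a correctly built image.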

I build this image and use it in Docker Compose for the lean-engine container.

I hope this explanation makes the flow clear.