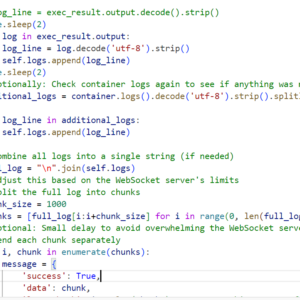

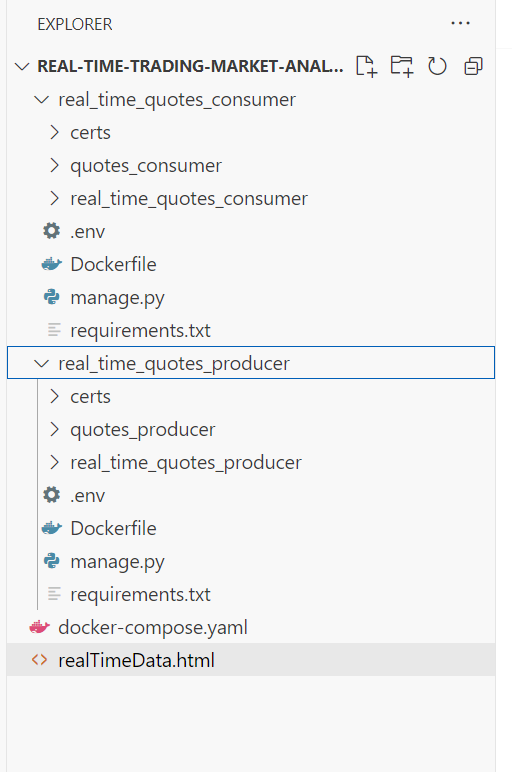

Lately I have been drawn to the trading and risk management domain; I have always found the financial sector interesting. This project is especially interesting because I am trying to capture data live, with no database involved. The flow is quite simple.

There is a data producer that captures data from Finnhub, a service that provides the financial data needed for making trading decisions. I call this module the Market Data Feed Handler. I opted for Finnhub because its data is free; in practice, clients would generally prefer to fetch data from major stock exchanges.
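
A minimal sketch of the fetch step, assuming Finnhub's REST quote endpoint (`https://finnhub.io/api/v1/quote`) and its short field names (`c` current price, `d` change, `dp` percent change, `h` high, `l` low, `o` open, `pc` previous close, `t` timestamp) as I understand them from Finnhub's docs. `fetch_quote` and `normalize_quote` are hypothetical helper names, and the field mapping should be checked against the current API reference.

```python
from __future__ import annotations

import json
import urllib.parse
import urllib.request
from typing import Any

# Assumed REST endpoint for a single-symbol quote; verify against Finnhub's docs.
FINNHUB_QUOTE_URL = "https://finnhub.io/api/v1/quote"


def fetch_quote(symbol: str, token: str, timeout: float = 10.0) -> dict[str, Any]:
    """Fetch the raw quote JSON for one symbol (network call, needs an API key)."""
    query = urllib.parse.urlencode({"symbol": symbol, "token": token})
    with urllib.request.urlopen(f"{FINNHUB_QUOTE_URL}?{query}", timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def normalize_quote(symbol: str, raw: dict[str, Any]) -> dict[str, Any]:
    """Map the short response field names to descriptive ones."""
    return {
        "symbol": symbol,
        "current": raw["c"],
        "change": raw.get("d"),           # may be missing or null
        "percent_change": raw.get("dp"),  # may be missing or null
        "high": raw["h"],
        "low": raw["l"],
        "open": raw["o"],
        "previous_close": raw["pc"],
        "timestamp": raw["t"],            # assumed UNIX seconds
    }
```

Something like `normalize_quote("AAPL", fetch_quote("AAPL", "YOUR_API_KEY"))` would then give a plain dict that the Kafka producer can serialize and send.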

In this module, I am capturing three kinds of data: real-time quotes, stock candles for charting, and tick data. These terms sounded alien at first, but they made sense once I dug in. For now, the module only fetches real-time quotes.
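
To make the candle/tick distinction concrete: a tick is a single trade (time, price, volume), and a candle summarizes all ticks in a fixed time window as open, high, low, close and volume (OHLCV). The sketch below, using hypothetical `Tick` and `ticks_to_candles` names, aggregates ticks into candles. It only illustrates the concept and is not part of the module yet.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Tick:
    ts: float      # UNIX seconds
    price: float
    volume: float


def ticks_to_candles(ticks: list[Tick], resolution_s: int = 60) -> list[dict]:
    """Aggregate ticks into OHLCV candles, one per resolution_s-wide time bucket."""
    candles: dict[int, dict] = {}
    for tick in sorted(ticks, key=lambda t: t.ts):
        # Start of the window this tick falls into, e.g. 61s -> 60s for 1-minute candles.
        bucket = int(tick.ts // resolution_s) * resolution_s
        candle = candles.get(bucket)
        if candle is None:
            # First tick in the window sets open/high/low/close.
            candles[bucket] = {"start": bucket, "open": tick.price, "high": tick.price,
                               "low": tick.price, "close": tick.price, "volume": tick.volume}
        else:
            candle["high"] = max(candle["high"], tick.price)
            candle["low"] = min(candle["low"], tick.price)
            candle["close"] = tick.price  # latest tick so far is the close
            candle["volume"] += tick.volume
    return [candles[k] for k in sorted(candles)]
```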

So, the components of the system are:

- A Kafka producer that connects to Finnhub and sends the fetched data to a Kafka topic

- The producer is wrapped in a viewset, which is called when the app initializes and then by a cron job that runs every 5 minutes

- A consumer reads the data from the topic and broadcasts it over a WebSocket

- The UI can connect to this WebSocket endpoint to show real-time data
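
A minimal sketch of the producer and consumer ends of this flow. It assumes the `kafka-python` package for the real producer (`KafkaProducer` with `send` and `flush`), keys each message by symbol, and stands in for the WebSocket layer with one `asyncio.Queue` per connected client. `publish_quotes`, `InMemoryProducer` and `Broadcaster` are hypothetical names, and the viewset/cron wiring is left out.

```python
from __future__ import annotations

import asyncio
import json
from typing import Any, Iterable


def serialize_quote(quote: dict[str, Any]) -> tuple[bytes, bytes]:
    """Key by symbol so every update for one symbol lands on the same partition."""
    key = quote["symbol"].encode("utf-8")
    value = json.dumps(quote, sort_keys=True).encode("utf-8")
    return key, value


def publish_quotes(producer: Any, topic: str, quotes: Iterable[dict[str, Any]]) -> int:
    """Send quotes via any object with send(topic, key=..., value=...) and flush()."""
    count = 0
    for quote in quotes:
        key, value = serialize_quote(quote)
        producer.send(topic, key=key, value=value)
        count += 1
    producer.flush()  # block until buffered records are delivered
    return count


def make_kafka_producer(bootstrap_servers: str = "localhost:9092") -> Any:
    """Real producer; imported lazily so the sketch runs without kafka-python installed."""
    from kafka import KafkaProducer  # pip install kafka-python
    return KafkaProducer(bootstrap_servers=bootstrap_servers)


class InMemoryProducer:
    """Stand-in with the same send/flush shape, handy for local testing."""

    def __init__(self) -> None:
        self.sent: list[tuple[str, bytes, bytes]] = []
        self.flushed = False

    def send(self, topic: str, key: bytes, value: bytes) -> None:
        self.sent.append((topic, key, value))

    def flush(self) -> None:
        self.flushed = True


class Broadcaster:
    """Consumer side: fan each consumed record out to every connected client.

    A WebSocket handler would call subscribe(), then loop on `await queue.get()`
    and forward each message to its socket; the consumer loop calls broadcast().
    """

    def __init__(self) -> None:
        self._clients: set[asyncio.Queue] = set()

    def subscribe(self) -> asyncio.Queue:
        queue: asyncio.Queue = asyncio.Queue()
        self._clients.add(queue)
        return queue

    def unsubscribe(self, queue: asyncio.Queue) -> None:
        self._clients.discard(queue)

    def broadcast(self, raw_value: bytes) -> int:
        """Push one decoded record to every client queue; returns the client count."""
        message = raw_value.decode("utf-8")
        for queue in self._clients:
            queue.put_nowait(message)
        return len(self._clients)
```

Keying by symbol lets Kafka keep each symbol's updates in order within its partition. The queue-per-client design means one slow UI connection does not block delivery to the others, though unbounded queues will grow if a client stops reading.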

There will be a separate module for each of the three types of data. The plan is:

- After the tick data and stock candle data are fetched, one service will parse and normalize them

- That service will produce to another service, which will store the data in the database in a proper format

- Then comes the distribution stage; I am still researching this part.
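
A rough sketch of the parse-and-normalize service, assuming Finnhub's column-oriented candle response (parallel `t`, `o`, `h`, `l`, `c`, `v` arrays plus a status field `s`) and its trade messages (`{"type": "trade", "data": [...]}` where each entry has `s` symbol, `p` price, `t` timestamp in milliseconds, `v` volume). Both shapes are my reading of the docs and worth verifying. The output rows are a hypothetical common format that the storage service could insert directly.

```python
from __future__ import annotations

from typing import Any


def normalize_candles(symbol: str, raw: dict[str, Any]) -> list[dict[str, Any]]:
    """Turn a column-oriented candle response into one row per candle."""
    if raw.get("s") != "ok":  # e.g. "no_data": nothing to store
        return []
    columns = zip(raw["t"], raw["o"], raw["h"], raw["l"], raw["c"], raw["v"])
    return [
        {"symbol": symbol, "ts": ts, "open": open_, "high": high,
         "low": low, "close": close, "volume": volume}
        for ts, open_, high, low, close, volume in columns
    ]


def normalize_trades(message: dict[str, Any]) -> list[dict[str, Any]]:
    """Flatten a trade message into rows; other message types (e.g. pings) yield nothing."""
    if message.get("type") != "trade":
        return []
    return [
        # Convert the assumed millisecond timestamp to seconds to match candle rows.
        {"symbol": d["s"], "price": d["p"], "ts": d["t"] / 1000, "volume": d["v"]}
        for d in message.get("data", [])
    ]
```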

Today I just jotted down the starter code for the flow; I will implement it fully in the next few days.