24th July was all about Kafka, and now it's the 25th. I started at 10:00 pm and have only just wrapped up. I thought of bringing in Kafka as the message broker for writing logs to the database. Why not try something complicated for once? I was recently asked how to maintain data consistency in Kafka, and I replied off the cuff that we have to adjust the offset. I was somewhat skeptical of that answer, but then I remembered a video I had watched about the Kafka architecture.

That video had tons of material about offsets, round robin and the like.

When I replaced the code with Kafka, I kept getting a 500 Internal Server Error with a cryptic description. It pointed me to a line of code, but honestly that was only where the error surfaced; the actual cause was somewhere else.

My way of debugging took time to bear fruit, but in the end it worked. Here is the list of things I did:

- Check all messages are delivered to Kafka

- Configure logs to suppress Kafka debug logs and show application debug logs

- Profile the main function to check which part is taking time

- Solved the 500 error

- Implemented the singleton pattern for Kafka and a connection pool to manage connections

- Using httpx, which can handle both sync and async requests, so that you spare yourself getting coroutines instead of response objects

- Increasing the timeout for the gunicorn command

- Handling the case where the broker is not available

Actually, since this was inter-service communication, if one service returns a negative response, the caller should be able to handle it; otherwise it throws a 500 Internal Server Error.
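To make that concrete, here is a minimal sketch of how a caller could translate a downstream reply instead of letting it blow up. The function name `handle_upstream` and the choice of status codes are my own assumptions for illustration, not the exact code from this project.

```python
def handle_upstream(status_code, payload):
    """Map a downstream service's reply to (status, body) for our own response.

    Hypothetical helper: the mapping below is one reasonable choice, not a rule.
    """
    if 200 <= status_code < 300:
        # Downstream succeeded, pass the payload through.
        return 200, payload
    if 400 <= status_code < 500:
        # Downstream rejected the request; surface that instead of crashing.
        return status_code, {"error": "downstream rejected the request", "detail": payload}
    # Anything else means the downstream service itself failed.
    return 502, {"error": "downstream service failed", "detail": payload}
```

With something like this in the caller, a negative response becomes a deliberate 4xx or 502 with a readable body rather than an unhandled exception.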

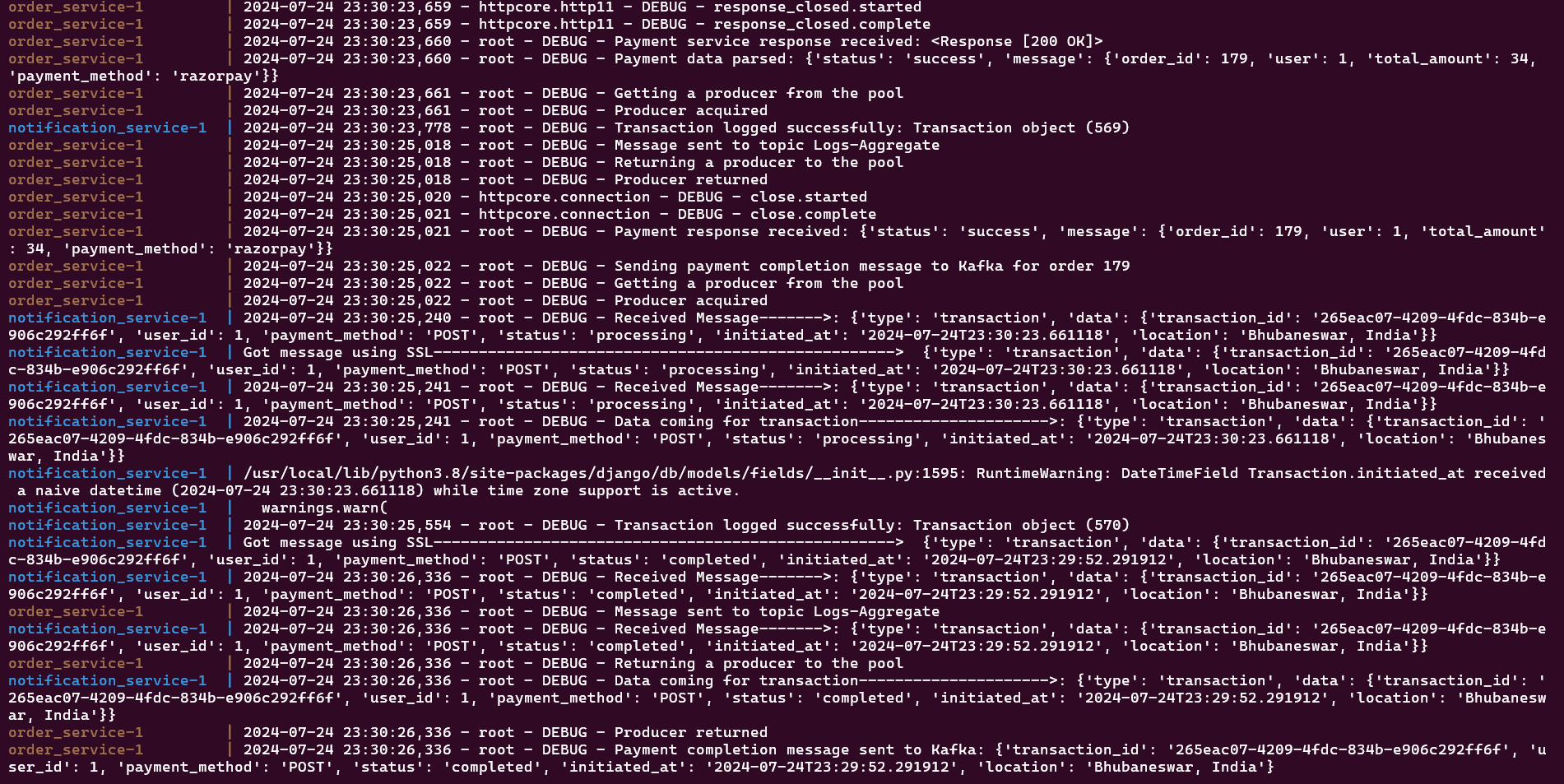

Check all messages are delivered to Kafka

This can be ensured by configuring the producer and consumer instances so that a message is acknowledged by all the Kafka nodes. I had spawned 3 nodes on the cloud to host Kafka.
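A rough sketch of what such a producer setup can look like, assuming the kafka-python client (the post does not say which client was used, so treat that as an assumption). The helper names, the retry count and the broker addresses are hypothetical. Note that `acks="all"` waits for all in-sync replicas of the partition.

```python
import json


def build_producer_config(bootstrap_servers):
    """Settings that make the producer wait for every in-sync replica."""
    return {
        "bootstrap_servers": bootstrap_servers,
        "acks": "all",   # wait for all in-sync replicas to acknowledge
        "retries": 5,    # assumed value: retry transient send failures
        "value_serializer": lambda record: json.dumps(record).encode("utf-8"),
    }


def make_producer(bootstrap_servers):
    # Imported lazily so the config helper can be used without the client installed.
    from kafka import KafkaProducer  # assumption: kafka-python

    return KafkaProducer(**build_producer_config(bootstrap_servers))


def send_log(producer, topic, record, timeout=10):
    """Send one record and block until the broker confirms it (or raise)."""
    # send() is asynchronous; get() waits for the acknowledgment.
    metadata = producer.send(topic, record).get(timeout=timeout)
    return metadata.partition, metadata.offset
```

Usage would be along the lines of `send_log(make_producer(["broker1:9092", "broker2:9092", "broker3:9092"]), "app-logs", {"level": "INFO"})`, where the broker addresses and topic name are placeholders.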

Configure logs to suppress Kafka debug logs and show application debug logs

It looks like the Kafka folks were doing some debugging, because the client emits a lot of debug messages. With my application logs and the Kafka logs mixed together inside my application, I had to suppress the Kafka ones. Doing so also made the profiling output easier to spot.

I must say logs are a powerful debugging tool, and structuring them so that irrelevant ones can be filtered out is even more important.
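Something like the following does the trick with the standard `logging` module. It assumes the kafka-python client, whose loggers live under the `kafka` namespace; the application logger name `app` is just a placeholder.

```python
import logging


def configure_logging(app_logger_name="app"):
    """Keep application DEBUG logs, silence the Kafka client's chatter."""
    logging.basicConfig(
        level=logging.INFO,
        format="%(asctime)s %(levelname)s %(name)s: %(message)s",
    )
    # Every logger under "kafka" (kafka.conn, kafka.client, ...) inherits this level.
    logging.getLogger("kafka").setLevel(logging.WARNING)

    # The application logger stays verbose.
    app_logger = logging.getLogger(app_logger_name)
    app_logger.setLevel(logging.DEBUG)
    return app_logger
```

Calling `configure_logging()` once at startup is enough; after that only warnings and errors from the Kafka client show up next to the application's own debug lines.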

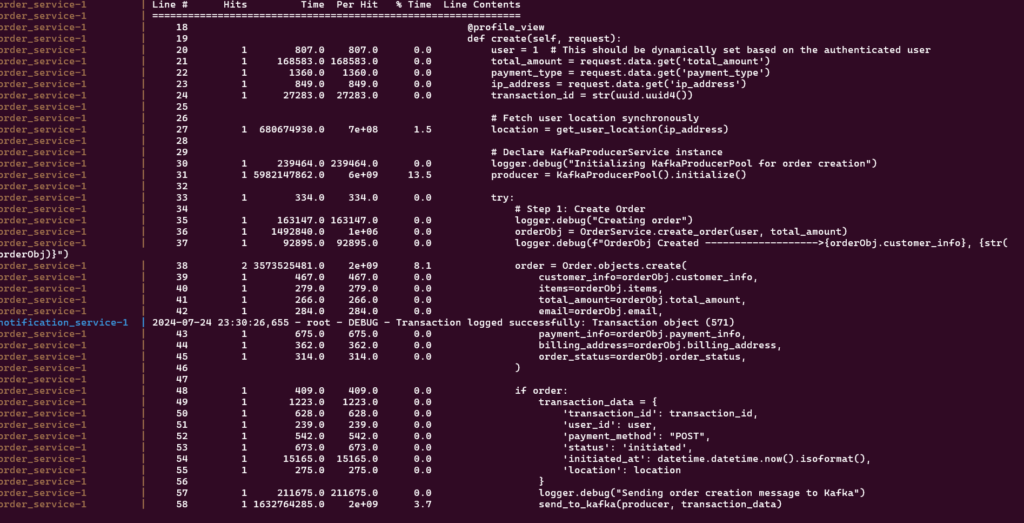

Profile the main function to check which part is taking time

This is the next powerful tool. It helped me figure out which line of a function was taking time and how many times each line was hit.

I used line_profiler to analyse parts of the code. Here is a snippet of what the statistics look like:

Instantiating the KafkaProducer and sending the logs were taking a whole lot of time. This led me to the next step.
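For anyone who wants to reproduce this kind of measurement, here is a small sketch using the `line_profiler` package. The functions `create_client` and `handle_request` are made-up stand-ins for the real code, with a `sleep` imitating an expensive producer instantiation.

```python
import time


def create_client():
    """Stand-in for an expensive client/producer instantiation."""
    time.sleep(0.01)
    return object()


def handle_request(n_logs):
    """Stand-in for the main function: builds a new client for every log."""
    sent = 0
    for _ in range(n_logs):
        client = create_client()  # re-created on every iteration
        sent += 1
    return sent


def profile_lines(func, *args, **kwargs):
    """Run func under line_profiler and print per-line hits and timings."""
    from line_profiler import LineProfiler  # pip install line_profiler

    profiler = LineProfiler()
    wrapped = profiler(func)   # wrap the function to be measured
    result = wrapped(*args, **kwargs)
    profiler.print_stats()     # table with Hits, Time, Per Hit, % Time per line
    return result
```

Running `profile_lines(handle_request, 20)` prints one row per source line, which is how a line like the client creation stands out as the hot spot.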

Implemented the singleton pattern for Kafka and a connection pool to manage connections

This is a design pattern that creates only one instance of a class no matter how many times it is called. It helped decrease the execution time because, in the nested flow, the producer no longer has to be instantiated again and again.

Plus, I added connection pools so that Kafka connections could be reused instead of creating a new one every time we instantiate.
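One common way to write such a singleton in Python is sketched below. The class name `ProducerSingleton` and the `factory` argument are hypothetical; the factory would be whatever builds the real Kafka producer. The sketch covers only the singleton part, not the connection pool.

```python
import threading


class ProducerSingleton:
    """Lazily creates one shared producer and hands it out on every call."""

    _instance = None
    _lock = threading.Lock()

    @classmethod
    def get(cls, factory):
        # Fast path: already created, no locking needed.
        if cls._instance is None:
            with cls._lock:
                # Re-check inside the lock so two threads cannot both create one.
                if cls._instance is None:
                    cls._instance = factory()
        return cls._instance

    @classmethod
    def reset(cls):
        """Drop the shared instance (handy in tests or after a fatal error)."""
        with cls._lock:
            cls._instance = None
```

Every call site then uses `ProducerSingleton.get(make_producer)` (with `make_producer` being your own builder), and the expensive instantiation happens only on the first call.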

Using httpx, which can handle both sync and async requests, so that you spare yourself getting coroutines instead of response objects

There were times, especially in negative scenarios where the retry mechanism triggers, when the logs showed Kafka still doing some work while, simultaneously, an HTTP request had to be made to the external service as per the retry logic. This clash even resulted in a 500 Internal Server Error. I used httpx, a powerful tool, to handle the situation.
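The coroutine-versus-response confusion is easy to show. Below is a small sketch with httpx's two clients; the function names and the 5 second timeout are my own placeholders, not the project's actual code.

```python
import asyncio


def call_service_sync(url, timeout=5.0):
    """Blocking call: returns an httpx.Response directly."""
    import httpx  # pip install httpx

    with httpx.Client(timeout=timeout) as client:
        return client.get(url)


async def call_service_async(url, timeout=5.0):
    """Async call: must be awaited, otherwise you only hold a coroutine."""
    import httpx

    async with httpx.AsyncClient(timeout=timeout) as client:
        return await client.get(url)


def call_async_from_sync_code(url):
    """Run the async variant from plain synchronous code.

    Only valid when no event loop is already running in this thread.
    """
    return asyncio.run(call_service_async(url))
```

Calling `call_service_async(url)` without `await` gives back a coroutine object, not a response, so reading `.status_code` from it fails. In synchronous code paths, use the sync client (or `asyncio.run`) instead.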

Apart from that, I added a retry mechanism during consumer instantiation as well, which handles the case where the broker is unavailable at that moment. I also increased the timeout of the command that runs the server (gunicorn), to allow Kafka some time to do its thing so that the next request in the queue can start.
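A retry wrapper for the consumer can be as simple as the sketch below. The helper `connect_with_retry`, the number of attempts and the exponential backoff are assumptions for illustration; the real values depend on how long your brokers take to come back.

```python
import time


def connect_with_retry(factory, retriable=(Exception,), attempts=5,
                       base_delay=1.0, sleep=time.sleep):
    """Call factory() until it succeeds, backing off between failed attempts."""
    for attempt in range(1, attempts + 1):
        try:
            return factory()
        except retriable:
            if attempt == attempts:
                raise  # out of attempts, let the caller decide what to do
            # Exponential backoff: 1s, 2s, 4s, ... with the default base_delay.
            sleep(base_delay * 2 ** (attempt - 1))
```

With kafka-python (an assumption, as before) this would be called with a factory such as `lambda: KafkaConsumer("app-logs", bootstrap_servers=brokers)` and `retriable=(NoBrokersAvailable,)` from `kafka.errors`, where the topic name and `brokers` are placeholders. On the server side, gunicorn's worker timeout is raised with its `--timeout` option, which takes a value in seconds and defaults to 30.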