When you are starting out with Kubernetes, as I once was, you will run into a lot of unanswered questions about ingress traffic flow and how to fix it when it breaks. In this post, we will address a few of them.

For this, I assume you already have a Kubernetes cluster on your favorite cloud platform.

Here are a few scenarios.

You have an ingress and a service with a load balancer. When traffic comes in from outside, you are unsure whether it hits the ingress first and then goes to the pods directly through the ingress load balancer, or whether it goes to the service, which finds the pod IPs via its selector and then forwards the traffic to the pods.

Which one, the service or the ingress, uses the readiness probe defined in the deployment?

How does ingress traffic reach the cluster?

In order to fix deployment problems, we have to know how to trace the traffic flow, and for that we have to know how things work.

There are two things you want to understand: deployments and services.

A service groups a set of pods behind a single, stable IP address and is responsible for enabling network access to them. A deployment declares the desired state of the pods. Any change you want to make to the pods in the cluster is done through the deployment; this is where terms like rolling out updated code or rolling back to a previous version of a deployment come from.

The main service types in Kubernetes are ClusterIP (the default), NodePort, and LoadBalancer. In our project, we are using ClusterIP.

Using a deployment allows you to easily keep a group of identical pods running with a common configuration.
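To make the roll out and roll back terms concrete, here is a minimal sketch using `kubectl rollout`. The function names are my own, and the deployment name in the usage line is hypothetical, so substitute your own.

```shell
# Inspect the rollout of a deployment (name passed as $1).
rollout_check() {
  kubectl rollout status "deployment/$1"    # waits until the rollout finishes
  kubectl rollout history "deployment/$1"   # lists the recorded revisions
}

# Roll the deployment back to its previous revision.
rollout_undo() {
  kubectl rollout undo "deployment/$1"
}
```

For example, `rollout_check amazonishv2app` shows where the rollout stands, and `rollout_undo amazonishv2app` reverts it if the new version misbehaves.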

Next comes the LoadBalancer service or the ingress controller; you can use either one to route external requests into the cluster and on to the pods.

The case of the usual load balancer

- A LoadBalancer service is the standard way to expose a service to the internet. On GKE, for example, this will spin up a network load balancer that gives you a single IP address and forwards all traffic to your service

- It supports a range of traffic types, including TCP, UDP and gRPC

- Each service exposed through a LoadBalancer is assigned its own IP address (rather than sharing one, as with ingress)

- A LoadBalancer service points to a load balancer that resides outside the cluster, also called an external load balancer

The case of the ingress controller

- Kubernetes ingress sits in front of multiple services and acts as the entry point for an entire cluster of pods.

- Ingress allows multiple services to be exposed using a single IP address. Those services then all use the same L7 protocol (such as HTTP).

- A Kubernetes cluster’s ingress controller monitors ingress resources and updates the configuration of its proxy server according to the ingress rules.

- On GKE, the default ingress controller will spin up an HTTP(S) load balancer. This lets you do both path-based and subdomain-based routing to backend services.

Generally speaking, the traffic flow is:

Internet <-> external load balancer (e.g. AWS ELB) <-> k8s ingress <-> k8s service <-> k8s pods

Now that we know the basics, we can move ahead and troubleshoot our pods.

I will give you a hierarchy of how you should go about troubleshooting for root cause analysis (RCA).

curl http://www.amazonish.test/

--> DNS Resolution

--> Ingress Controller: host: www.amazonish.test | path: /

--> amazonishv2app-svc:appPort

--> one of the pods associated with the service

How does that work?

- DNS resolution happens and retrieves the IP address associated with the domain name

- In this project, I have mapped the IP of the ingress load balancer to a local domain in the hosts file. In other cases, you would get a domain with an SSL certificate and configure DNS to point to the cluster.

- The request goes to that IP address

- The request hits the ingress controller (Nginx)

- The ingress controller looks at the host and the path and retrieves the associated service. In our case, the path is / and the service is amazonishv2app-svc

- The service sends the request to one of the pods that it handles

- Your api/app handles the request
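The steps above can be exercised end to end without touching the hosts file. This is a small sketch using curl's `--resolve` option, which pins a host name to an IP for a single request. The function name is my own, and the IP in the usage line is only a placeholder for your ingress load balancer IP.

```shell
# Send a request to the ingress load balancer IP ($2) as if DNS had
# resolved the host ($1) to it. Plain HTTP on port 80 is assumed.
curl_via_ingress() {
  curl -sv --resolve "$1:80:$2" "http://$1/"
}
```

For example, `curl_via_ingress www.amazonish.test 203.0.113.10`. If this works but a normal `curl http://www.amazonish.test/` does not, the problem is most likely in name resolution rather than in the cluster.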

The troubleshooting approach

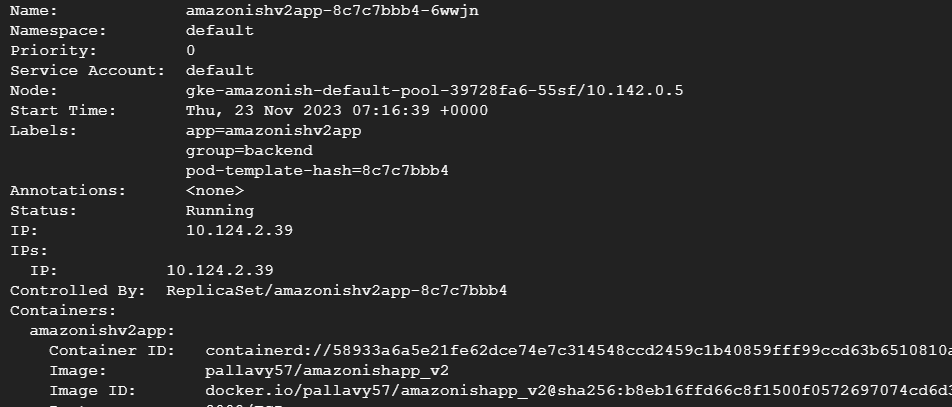

Check the pods

- Get the pods

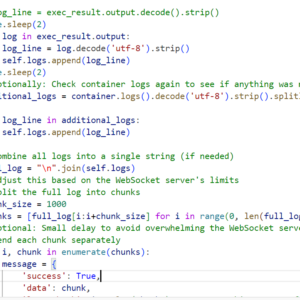

- See the logs

- Access the pod directly using kubectl port-forward and access it through localhost in another shell

kubectl get pod -o wide

kubectl logs pod_name

kubectl port-forward pod_name 8000:8000

curl localhost:8000

On executing the curl, you should see the expected content. If so, the root cause (RC) is not internal to the application.

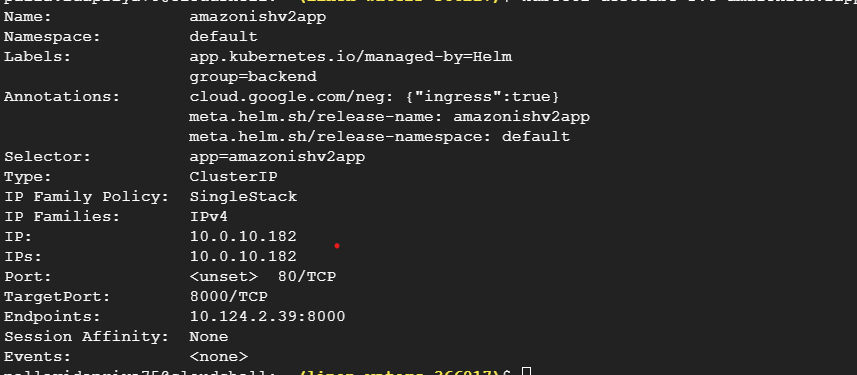

Check the Service

- Get the svc

  - The Selector must match the pod label
  - The TargetPort must match the port you are using in your pod
  - The Endpoints section must contain the IP address of your pod

- See the logs

- Access the service directly using kubectl port-forward and access it through localhost in another shell

On executing the curl, you should see the expected content. If so, the root cause (RC) is not at this level.
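As a rough sketch, the service checks above could look like this. The function names are my own, and the port numbers in the usage line are assumptions; use the ports from your own service declaration.

```shell
# Show the selector, target port and endpoints of a service ($1).
check_service() {
  kubectl describe service "$1"
  kubectl get endpoints "$1"
}

# Forward local port $2 to service port $3 (blocks until interrupted).
forward_service() {
  kubectl port-forward "service/$1" "$2:$3"
}
```

For example, run `check_service amazonishv2app-svc`, then `forward_service amazonishv2app-svc 8000 8000`, and in another shell `curl localhost:8000`.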

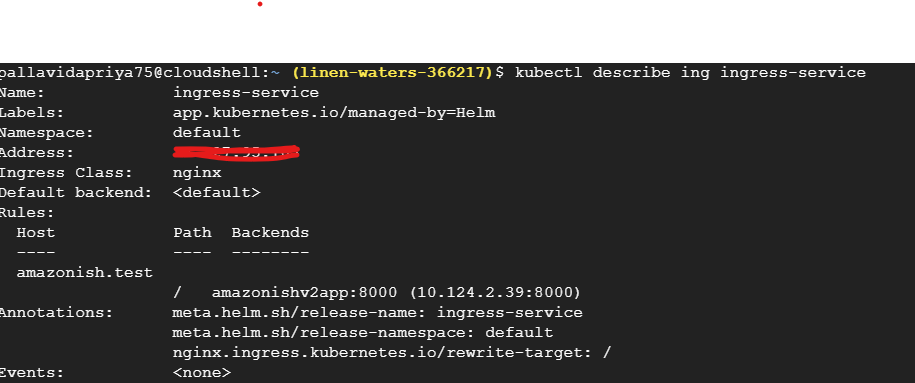

Check the ingress

- Get the ingress

  - The Host is the one you’re using
  - The Service is the one we checked previously
  - The Port is the same as the one used in the service declaration

- See the logs

- Then exec on the ingress controller pod to curl locally through the ingress controller. As the host and the path are used to route traffic, you will need to specify both: `curl -H "Host: www.amazonish.test" localhost/`

kubectl exec -it ingress-nginx-controller-6bf7bc7f94-zgms5 -- sh

On executing the curl, you should see the expected content. If so, the root cause (RC) is not at this level.
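Here is a sketch of the ingress checks as small helpers. The function names are my own, and the `ingress-nginx` namespace is an assumption based on a common Nginx ingress controller install; set `INGRESS_NS` if your controller lives elsewhere.

```shell
# Namespace of the ingress controller; ingress-nginx is an assumption.
INGRESS_NS="${INGRESS_NS:-ingress-nginx}"

# Show the host, path and backend of an ingress resource ($1).
check_ingress() {
  kubectl describe ingress "$1"
}

# Print the recent logs of the controller pod ($1).
ingress_logs() {
  kubectl logs --tail=50 -n "$INGRESS_NS" "$1"
}

# Curl through the controller from inside its own pod ($1) for host $2.
curl_in_controller() {
  kubectl exec -n "$INGRESS_NS" "$1" -- curl -s -H "Host: $2" localhost/
}
```

For example, `curl_in_controller ingress-nginx-controller-6bf7bc7f94-zgms5 www.amazonish.test` runs the curl in one step instead of opening a shell in the pod first.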

Check the DNS

You need to ensure that DNS resolution returns the external IP of the LoadBalancer used by the ingress controller.

If it doesn’t return the correct IP, then the DNS record for your domain (for example, the CNAME for “example.com”) points to the wrong target or is not set.
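A minimal sketch of that comparison follows. It assumes the controller is exposed by a service named `ingress-nginx-controller` in the `ingress-nginx` namespace, and that `getent` is available (it is on most Linux systems, and it honors the hosts file). On providers that publish a hostname instead of an IP for the load balancer, the `.ip` field will be empty and you would compare hostnames instead.

```shell
# Compare the IP a host name ($1) resolves to with the external IP of the
# ingress controller's LoadBalancer service. Returns 0 only if they match.
check_dns() {
  lb_ip=$(kubectl get service ingress-nginx-controller -n ingress-nginx \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
  dns_ip=$(getent hosts "$1" | awk '{print $1; exit}')
  echo "load balancer: ${lb_ip:-<none>}"
  echo "dns:           ${dns_ip:-<none>}"
  [ -n "$lb_ip" ] && [ "$lb_ip" = "$dns_ip" ]
}
```

For example, `check_dns www.amazonish.test && echo "DNS is fine"`.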

Pointers related to the error codes

503 Service Unavailable

This tells us that the request is making it to our ingress and the Nginx ingress load balancer is trying to route it, but there is nothing to route it to. When you receive this message, it usually means that no pod associated with the service is in a ready state. You should check whether the pods are ready to serve traffic.

Here I am describing the ingress resource.

The backend column for the ingress shows which service and port this ingress is associated with. At this point, we should make sure this is correct and that this is where we want the traffic to go.

Next, let’s go to the service level. The first thing we want to check is the “endpoint”, which is essentially the IP of the pod.

If there are no endpoints, then the service has nothing to send the traffic to, and that is the problem. At this point, look at the service Selector to make sure it is the correct one. The Selector is a set of labels used to select which pods belong to the service. This means that the pods you want associated with this service must carry the same labels.

So, the selector of the service should match the labels of the connected pods. If all is well here and we still don’t have an endpoint at the service level, then we want to check whether the pod is in a ready state, since a pod that fails its readiness probe is removed from the service’s endpoints.

The next thing to check is whether the ports match what you expect, so that the incoming port is mapped to the correct target port on the pod.
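The selector and readiness checks described above can be sketched as follows. The function names are my own, and the label in the usage line is hypothetical; use the selector your service actually prints.

```shell
# Print the selector of a service ($1), then all pods with their labels,
# so the two can be compared by eye.
check_selector() {
  kubectl get service "$1" -o jsonpath='{.spec.selector}'
  echo
  kubectl get pods --show-labels
}

# List the pods carrying a label ($1, e.g. app=myapp); the READY column
# shows whether they are passing their readiness probe.
check_ready() {
  kubectl get pods -l "$1" -o wide
}
```

For example, `check_selector amazonishv2app-svc` followed by `check_ready app=amazonishv2app`. If the second command lists no pods, the selector and the pod labels do not match.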

That’s all you need to do to troubleshoot ingress traffic in a Kubernetes cluster. You can also check connected posts here.
